10 Powerful eCommerce Data Collection Strategies & Examples

Data extraction transforms raw, scattered information into structured, usable datasets. Using AI, automation, and scalable techniques, companies can ensure the accuracy, compliance, and efficiency that power analytics, automation, and decision-making.


Data is gold; we all know that. The question is how to sift the gold from the ore, and that is where data extraction comes in. First, identify your data sources. Next, choose the extraction technique that works best for you. AI and automation can speed up extraction, and staying current with the latest trends helps you maintain efficiency and accuracy. Alternatively, you can hand the work to a professional data extraction service.

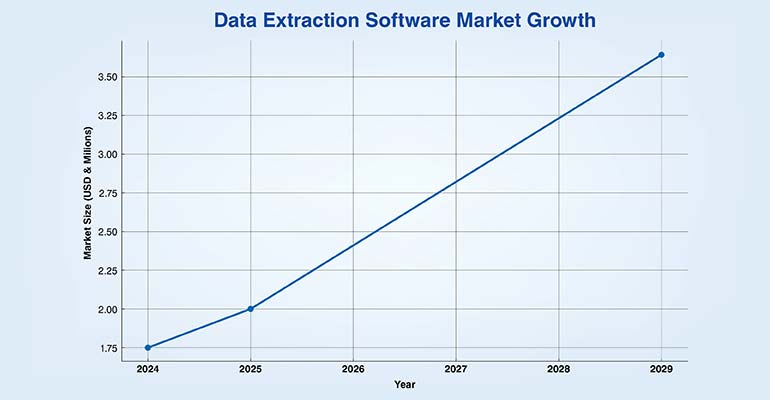

With global data volume projected to reach 175 zettabytes, data extraction can’t be ignored. To keep up with demand, the global data extraction market is growing rapidly: it is forecast to expand by an estimated $3.4 billion from 2025 to 2029, a compound annual growth rate (CAGR) of 15.9%.

With an estimated 80% of data unstructured and largely untapped, investing in automated data extraction techniques is essential.

Let’s explore what data extraction is, the main extraction methods, and real-life examples of data extraction.

Data extraction is the act of pulling raw data from sources such as databases, websites, invoices, Excel spreadsheets, and PDFs. The data can be structured, unstructured, or a combination of both. The extracted data is then standardized into the required format and centralized for storage, either on-site or in the cloud.
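As a rough illustration of the extract, standardize, and centralize steps, here is a minimal Python sketch. The two inline sources (a CSV export and a JSON feed), their field names, and the `products` table are hypothetical; an in-memory SQLite database stands in for on-site or cloud storage.

```python
import csv
import io
import json
import sqlite3

# Hypothetical raw inputs standing in for two real sources:
# a CSV export (structured) and a JSON feed with different field names.
CSV_EXPORT = """sku,product_name,price_usd
A-100,Desk Lamp,19.99
B-200,Office Chair,149.00
"""
JSON_FEED = '[{"id": "C-300", "title": "Monitor Arm", "price": "59.5"}]'


def extract_csv(text):
    """Extract: read rows from a CSV export as dicts."""
    return list(csv.DictReader(io.StringIO(text)))


def extract_json(text):
    """Extract: read records from a JSON feed."""
    return json.loads(text)


def standardize(record, mapping):
    """Standardize: rename source fields to one common schema and coerce the price to float."""
    out = {target: record[source] for target, source in mapping.items()}
    out["price"] = round(float(out["price"]), 2)
    return out


def centralize(records):
    """Centralize: load standardized records into one store (in-memory SQLite here)."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE products (sku TEXT PRIMARY KEY, name TEXT, price REAL)")
    conn.executemany(
        "INSERT INTO products (sku, name, price) VALUES (:sku, :name, :price)", records
    )
    conn.commit()
    return conn


records = [
    standardize(r, {"sku": "sku", "name": "product_name", "price": "price_usd"})
    for r in extract_csv(CSV_EXPORT)
] + [
    standardize(r, {"sku": "id", "name": "title", "price": "price"})
    for r in extract_json(JSON_FEED)
]
conn = centralize(records)
rows = conn.execute("SELECT sku, name, price FROM products ORDER BY sku").fetchall()
print(rows)
```

Each source gets its own field mapping, so adding a new source means writing one more mapping rather than changing the storage schema.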

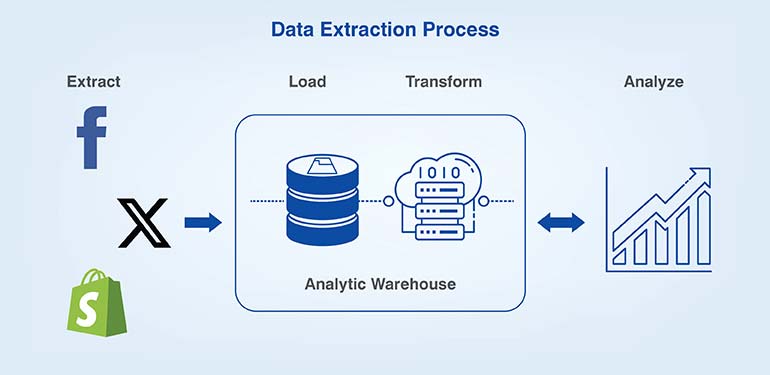

It’s important not to confuse data extraction with data collection; they are very different processes. Data collection gathers data directly from its source (primary or secondary), while data extraction retrieves data from a pre-existing source. Data extraction is the first stage of an ETL (Extract, Transform, Load) pipeline, which pulls feeds from various data sources, transforms that data, and then loads it into a target such as a relational database. It is the first layer, feeding raw data to all downstream systems and processes.

Many teams now use ELT (Extract, Load, Transform) instead of ETL, which can be slow because it transforms the data on a separate server before loading. ELT loads the raw data first and then transforms it inside the target system, which often makes the overall pipeline faster.
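To make the ELT ordering concrete, this minimal sketch loads raw rows untouched into a staging table and only then transforms them with SQL inside the database. SQLite stands in for a cloud warehouse, and the `raw_orders`/`paid_orders` tables and their values are hypothetical.

```python
import sqlite3

# Hypothetical raw order rows exactly as they arrive from a source system:
# everything is text, and status casing/spacing is inconsistent.
RAW_ROWS = [
    ("1", "20.00", "Paid "),
    ("2", "15.50", "refunded"),
    ("3", "42.25", "PAID"),
]

conn = sqlite3.connect(":memory:")

# Extract + Load: land the rows as-is in a raw (staging) table, no cleanup yet.
conn.execute("CREATE TABLE raw_orders (order_id TEXT, amount TEXT, status TEXT)")
conn.executemany("INSERT INTO raw_orders VALUES (?, ?, ?)", RAW_ROWS)

# Transform: runs inside the target database, after loading.
conn.execute(
    """
    CREATE TABLE paid_orders AS
    SELECT CAST(order_id AS INTEGER) AS order_id,
           CAST(amount AS REAL)      AS amount
    FROM raw_orders
    WHERE LOWER(TRIM(status)) = 'paid'
    """
)

paid_count = conn.execute("SELECT COUNT(*) FROM paid_orders").fetchone()[0]
total = conn.execute("SELECT SUM(amount) FROM paid_orders").fetchone()[0]
print(paid_count, total)
```

Because the raw table is kept, the transformation can be rewritten and re-run later without extracting from the source again, which is one of the practical draws of ELT.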

Without data, your decisions are based on hunches. Data can reveal patterns, surface insights, and flag risks, letting you act faster than competitors. It can even let you act before others have seen the shift.

And how do you obtain that data? Through extraction. This is why it is so important – it unlocks a universe of insights.

Here’s the value it brings:


The right extraction method depends on the source and on the purpose of the extraction. Sometimes manual extraction is sufficient; other times you’ll need automation or smarter tools.

Web scraping is the method of extracting data from websites. A retailer might use it to monitor competitors’ product prices, or a business to track a competitor’s updates.

Basic scrapers use Python scripts or application programming interfaces (APIs). Dynamic websites add complexity because their content is generated with JavaScript rather than served as plain HTML; in those cases, you will need a headless browser. CAPTCHAs can also be an obstacle that requires human intervention.
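For a static page, a basic Python scraper can be as small as the sketch below, which uses the standard library’s `html.parser`. The markup and the `name`/`price` class names are hypothetical, and the HTML is inlined rather than fetched; a JavaScript-rendered page would need a headless browser instead, which this sketch does not cover.

```python
from html.parser import HTMLParser

# Hypothetical product-listing markup; in practice this would be fetched over HTTP.
SAMPLE_HTML = """
<ul>
  <li class="product"><span class="name">Desk Lamp</span><span class="price">$19.99</span></li>
  <li class="product"><span class="name">Office Chair</span><span class="price">$149.00</span></li>
</ul>
"""


class PriceParser(HTMLParser):
    """Collects (name, price) pairs from <span class="name"> and <span class="price">."""

    def __init__(self):
        super().__init__()
        self.current_field = None  # "name" or "price" while inside that span
        self.current = {}          # fields gathered for the current <li>
        self.products = []

    def handle_starttag(self, tag, attrs):
        cls = dict(attrs).get("class")
        if tag == "span" and cls in ("name", "price"):
            self.current_field = cls

    def handle_endtag(self, tag):
        if tag == "span":
            self.current_field = None
        elif tag == "li" and self.current:
            # One product per <li>: strip the currency symbol and convert the price.
            price = float(self.current["price"].lstrip("$"))
            self.products.append((self.current["name"], price))
            self.current = {}

    def handle_data(self, data):
        if self.current_field:
            self.current[self.current_field] = data.strip()


parser = PriceParser()
parser.feed(SAMPLE_HTML)
print(parser.products)
```

Selectors tied to class names like these are brittle: when the site changes its markup, the parser silently returns fewer products, so it is worth checking result counts on every run.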

It’s important to follow robots.txt directives and site rules, and to comply with privacy laws. Done correctly, scraping can provide more credible and reliable data than relying on third-party vendors.
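Python’s standard library can check robots.txt rules before a URL is requested. This sketch parses an inline, hypothetical robots.txt (against a live site you would call `set_url()` and `read()` instead); the shop domain and the user-agent string are made up.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt contents for an example shop.
ROBOTS_TXT = """
User-agent: *
Disallow: /checkout/
Crawl-delay: 5
"""

AGENT = "example-price-monitor"  # hypothetical user-agent string
URLS = [
    "https://shop.example.com/products/lamp",
    "https://shop.example.com/checkout/cart",
]

rp = RobotFileParser()
rp.parse(ROBOTS_TXT.splitlines())

# Decide per URL whether this agent may fetch it under the parsed rules.
results = {url: rp.can_fetch(AGENT, url) for url in URLS}
for url, ok in results.items():
    print(url, "allowed" if ok else "disallowed")

# Honor the site's requested pause (in seconds) between requests.
print("crawl delay:", rp.crawl_delay(AGENT))
```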

Data extraction from structured sources involves systematically capturing information stored in databases, spreadsheets, CRMs, and ERP systems and transforming it into usable formats for analysis and integration. APIs, SQL queries, and automation scripts retrieve the data with precision and consistency.
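Here is a minimal sketch of SQL-based extraction from relational tables, using an in-memory SQLite database. The schema, the rows, and the `updated_at` watermark are assumptions; incremental extraction by timestamp is one common pattern, not something specific to any particular CRM or ERP.

```python
import sqlite3

# Hypothetical source schema: customers and their orders.
conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE orders (
        id INTEGER PRIMARY KEY,
        customer_id INTEGER REFERENCES customers(id),
        total REAL,
        updated_at TEXT  -- ISO-8601 text, so string comparison orders correctly
    );
    INSERT INTO customers VALUES (1, 'Acme Corp'), (2, 'Globex');
    INSERT INTO orders VALUES
        (10, 1, 250.0, '2025-01-05T09:00:00'),
        (11, 2, 80.0,  '2025-01-06T14:30:00'),
        (12, 1, 120.0, '2025-01-07T08:15:00');
    """
)


def extract_orders_since(conn, watermark):
    """Incremental extraction: pull only rows changed after the previous run's watermark,
    joined to the customer table so each extracted row is self-describing."""
    rows = conn.execute(
        """
        SELECT o.id, c.name, o.total, o.updated_at
        FROM orders AS o
        JOIN customers AS c ON c.id = o.customer_id
        WHERE o.updated_at > ?
        ORDER BY o.updated_at
        """,
        (watermark,),
    ).fetchall()
    # Advance the watermark to the newest row seen; keep it unchanged if nothing is new.
    new_watermark = rows[-1][3] if rows else watermark
    return rows, new_watermark


rows, wm = extract_orders_since(conn, "2025-01-05T23:59:59")
print(rows)
print(wm)
```

Storing the returned watermark between runs means each run reads only new or changed rows instead of re-scanning whole tables, which keeps load on the source system low.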

This data extraction technique ensures schema compliance, metadata integrity, and referential accuracy across relational tables. It enables organizations to consolidate financial records, product catalogs, customer profiles, or clinical data without disrupting existing data architectures.

Advanced parsing logic and ETL pipelines streamline extraction from multiple structured environments, reducing manual intervention. The outcome is clean, validated, and query-ready data that supports downstream analytics, reporting, and AI model training.

Extraction via APIs enables direct, secure, and structured access to data from multiple digital platforms and enterprise systems. APIs provide standardized endpoints that facilitate controlled data retrieval without compromising system performance or security.

Through RESTful and GraphQL interfaces, data can be extracted in real time or batch mode based on business logic and authentication protocols. API-driven extraction supports pagination, rate limiting, and version management to maintain data consistency across updates. It ensures interoperability with CRMs, ERPs, financial systems, and eCommerce platforms.
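The sketch below shows cursor-based pagination with simple client-side rate limiting. The `fetch_page` callable and its `items`/`next_cursor` response shape are hypothetical; a fake three-page API stands in for a real REST endpoint, and a production version would also handle authentication, retries, and the provider’s documented rate limits.

```python
import time
from typing import Callable, Iterator, Optional

# Hypothetical page-fetcher signature: takes a cursor (None for the first page) and
# returns a dict like {"items": [...], "next_cursor": "..." or None}.
FetchPage = Callable[[Optional[str]], dict]


def extract_all(fetch_page: FetchPage, min_interval: float = 0.5) -> Iterator[dict]:
    """Follow cursor pagination until no next page is reported,
    spacing requests at least `min_interval` seconds apart."""
    cursor = None
    last_request = float("-inf")  # so the first request never waits
    while True:
        wait = min_interval - (time.monotonic() - last_request)
        if wait > 0:
            time.sleep(wait)  # simple client-side rate limiting
        last_request = time.monotonic()
        page = fetch_page(cursor)
        yield from page["items"]
        cursor = page.get("next_cursor")
        if not cursor:
            break


# Stand-in for a real endpoint: three pages of records keyed by cursor.
FAKE_PAGES = {
    None: {"items": [{"id": 1}, {"id": 2}], "next_cursor": "p2"},
    "p2": {"items": [{"id": 3}], "next_cursor": "p3"},
    "p3": {"items": [{"id": 4}], "next_cursor": None},
}

records = list(extract_all(lambda cursor: FAKE_PAGES[cursor], min_interval=0.1))
print([r["id"] for r in records])
```

Passing the fetcher in as a function keeps the pagination and throttling logic independent of any one API client, so the same loop can wrap different endpoints.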

Document and text extraction uses Optical Character Recognition (OCR) to convert unstructured content in PDFs and images into machine-readable data for processing and analysis. OCR identifies characters, tables, and key-value pairs in scanned or image-based files while preserving layout and data hierarchy. The process includes pre-processing steps such as de-skewing, noise reduction, and text normalization to improve recognition accuracy.

Extracted data is validated through rule-based parsing and metadata tagging to ensure compliance and consistency. This method supports high-volume document workflows across invoices, contracts, medical records, and property documents.
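OCR engines output raw text; normalization, rule-based parsing, and validation happen afterward. This sketch starts from hypothetical OCR output for an invoice (the OCR engine itself is not shown). The field names, regex patterns, and the letter-O-to-zero correction are illustrative assumptions for this one layout.

```python
import re
import unicodedata
from datetime import datetime

# Hypothetical raw OCR output: doubled spaces, a non-breaking space,
# and the letter "O" misread in place of a zero.
OCR_TEXT = "Invoice No:  INV-2O25-0042\nDate: 2025-03-14\nTotal\u00a0Due:  $1,250.00\n"

# Rule-based field patterns (assumed layout, for illustration only).
RULES = {
    "invoice_no": re.compile(r"Invoice No:\s*(INV-\d{4}-\d{4})"),
    "date": re.compile(r"Date:\s*(\d{4}-\d{2}-\d{2})"),
    "total": re.compile(r"Total Due:\s*\$([\d,]+\.\d{2})"),
}


def normalize(text):
    """Text normalization: unify Unicode forms, collapse spaces, fix one OCR confusion."""
    text = unicodedata.normalize("NFKC", text)      # non-breaking space -> plain space
    text = re.sub(r"[ \t]+", " ", text)             # collapse runs of spaces/tabs
    return re.sub(r"(?<=\d)O(?=\d)", "0", text)     # letter O between two digits -> zero


def extract_fields(text):
    """Apply each rule; missing fields become None so validation can flag them."""
    clean = normalize(text)
    fields = {}
    for name, pattern in RULES.items():
        m = pattern.search(clean)
        fields[name] = m.group(1) if m else None
    return fields


def validate(fields):
    """Deterministic checks; returns a list of problems (empty means valid)."""
    problems = [f"missing {k}" for k, v in fields.items() if v is None]
    if fields.get("date"):
        try:
            datetime.strptime(fields["date"], "%Y-%m-%d")
        except ValueError:
            problems.append("bad date")
    if fields.get("total") and float(fields["total"].replace(",", "")) <= 0:
        problems.append("non-positive total")
    return problems


fields = extract_fields(OCR_TEXT)
print(fields)
print(validate(fields))
```

Documents that return a non-empty problem list would typically go to an exception queue for manual review rather than straight into downstream systems.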

Hybrid or intelligent extraction methods combine rule-based automation with machine learning and natural language processing to handle both structured and unstructured data. This approach adapts to varying document formats, data sources, and semantic contexts, ensuring higher accuracy and scalability.

AI models detect entities, classify content, and interpret context, while deterministic rules manage validation and exception handling. The integration of OCR, NLP, and API-based extraction creates a unified workflow that optimizes data capture from diverse inputs such as forms, PDFs, and transaction logs. Continuous learning mechanisms refine extraction models over time, reducing manual correction.
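Here is a minimal sketch of the hybrid idea: deterministic rules decide first, a model handles what the rules miss, and low-confidence predictions are routed to human review. The keyword-overlap `model_predict` function is a hypothetical stand-in for a trained ML/NLP classifier, and the labels, patterns, and 0.5 threshold are assumptions.

```python
import re
from typing import Dict, List, Tuple

# Deterministic rules: high-precision patterns that settle the label outright.
RULES: List[Tuple[re.Pattern, str]] = [
    (re.compile(r"\bInvoice No\b", re.I), "invoice"),
    (re.compile(r"\bPolicy Number\b", re.I), "insurance_policy"),
]

# Hypothetical stand-in for an ML/NLP classifier: keyword-overlap scoring.
# A real system would call a trained model here instead.
KEYWORDS: Dict[str, set] = {
    "invoice": {"amount", "due", "tax", "subtotal"},
    "contract": {"agreement", "party", "term", "governing"},
    "medical_record": {"patient", "diagnosis", "dosage", "physician"},
}


def model_predict(text: str) -> Tuple[str, float]:
    """Return (label, confidence) where confidence is the share of that label's keywords present."""
    words = set(re.findall(r"[a-z]+", text.lower()))
    scores = {label: len(words & kw) / len(kw) for label, kw in KEYWORDS.items()}
    label = max(scores, key=scores.get)
    return label, scores[label]


def classify(text: str, threshold: float = 0.5) -> Dict[str, object]:
    """Rules first; fall back to the model; flag low-confidence results for review."""
    for pattern, label in RULES:
        if pattern.search(text):
            return {"label": label, "source": "rule", "needs_review": False}
    label, confidence = model_predict(text)
    return {
        "label": label,
        "source": "model",
        "confidence": confidence,
        "needs_review": confidence < threshold,  # exception-handling path
    }


docs = [
    "INVOICE NO 881 - amount due in 30 days",
    "This Agreement between each party sets the term and governing law.",
    "Quarterly newsletter for subscribers",
]
results = [classify(d) for d in docs]
for r in results:
    print(r)
```

Corrections made by reviewers on the `needs_review` items are the kind of labeled examples that a continuous-learning setup could feed back into model retraining.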

Selecting the right data extraction approach depends on the nature, scale, and operational context of the data source. Key factors include:

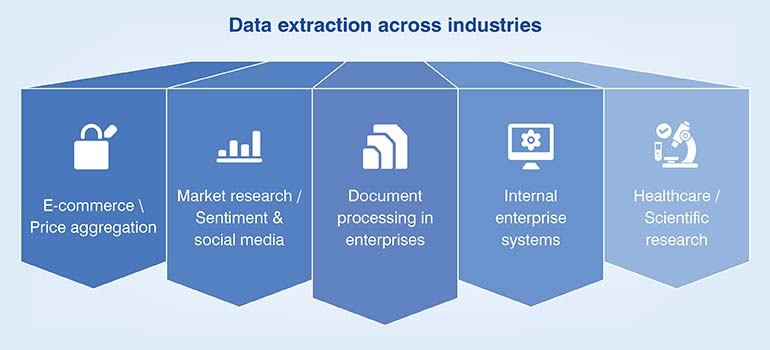

In real-world projects, data extraction is used across numerous industries and business functions to collect, organize, and analyze data from a variety of sources. It is often the first step in a larger data analytics, business intelligence, or machine learning project. Here are examples of data extraction from real projects, categorized by industry and application:

Data extraction is critical for modern business intelligence, but the process presents significant technical hurdles. Overcoming these common challenges requires robust, well-engineered strategies.

| Challenge Area | Problem | Best Practice |
|---|---|---|
| Diverse Data Formats | | |
| Data Quality Issues | | |
| Dynamic Data Sources | | |
| Scalability and Performance | | |
| Legal and Compliance | | |
| Complex Nested Structures | | |
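For complex nested structures, one common mitigation is to flatten nested JSON into column-style keys before loading it into tabular storage. The sketch below is a minimal illustration under assumed conventions (dotted key names, list items indexed by position); the sample order record is hypothetical, and empty nested objects or lists are simply dropped.

```python
from typing import Any, Dict


def flatten(obj: Any, prefix: str = "", sep: str = ".") -> Dict[str, Any]:
    """Flatten nested dicts/lists into dotted keys, e.g. {"a": {"b": 1}} -> {"a.b": 1}.
    List elements are keyed by position: {"tags": ["x"]} -> {"tags.0": "x"}."""
    if isinstance(obj, dict):
        items = obj.items()
    elif isinstance(obj, list):
        items = ((str(i), v) for i, v in enumerate(obj))
    else:
        return {prefix: obj}  # a leaf value becomes one column
    flat: Dict[str, Any] = {}
    for key, value in items:
        path = f"{prefix}{sep}{key}" if prefix else str(key)
        flat.update(flatten(value, path, sep))
    return flat


# Hypothetical nested order record, as an API or document parser might return it.
order = {
    "id": 7,
    "customer": {"name": "Acme", "address": {"city": "Austin"}},
    "items": [{"sku": "A-100", "qty": 2}, {"sku": "B-200", "qty": 1}],
}
print(flatten(order))
```

Positional keys such as `items.0.sku` suit short, fixed-length lists; for long line-item arrays, exploding each element into its own row in a child table is usually the better design.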

Data extraction is evolving rapidly, driven by AI, real-time systems, and regulatory demands. Emerging technologies enable smarter, faster, and more compliant data acquisition across industries.

Data extraction is a critical process for transforming raw information from diverse sources into structured, actionable datasets. From structured databases to unstructured documents, organizations leverage techniques like API extraction, OCR, and hybrid methods to enable analytics, automation, and AI initiatives. Successful implementation requires careful planning, robust validation, and compliance adherence. As data volumes grow, intelligent, scalable, and context-aware extraction pipelines will be essential for maintaining accuracy, efficiency, and competitive advantage across industries.

What’s next? Message us a brief description of your project.

Our experts will review it and get back to you within one business day with a free consultation.

Disclaimer:

HitechDigital Solutions LLP and Hitech BPO will never ask for money or commission to offer jobs or projects. If anyone contacts you with a job offer at our companies, please reach out to us at info@hitechbpo.com.
