
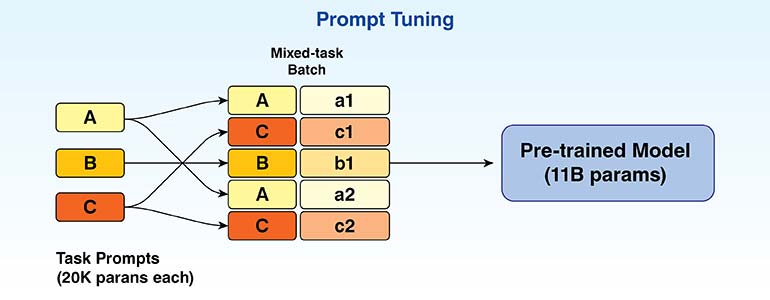

LLMs are good at handling general tasks, but specialized tasks typically need expensive model retraining. Prompt tuning addresses this by training small embeddings while keeping models frozen. It delivers specialized performance efficiently, without months of computation or high costs.

Large language models handle common applications like search and support just fine out of the box. But when you need one for a specific task or rigid workflow, that’s where things get tricky. Full fine tuning adjusts billions of parameters and will absolutely destroy your cloud budget. Plus, you’ll create a versioning nightmare that slows down your entire team. The problem is straightforward: we need a lightweight method to teach the model from our data without this massive overhead.

Prompt tuning gives you that lightweight path. Keep the base model frozen and only train a small set of learned prompts to get the behavior you want. You don’t need to copy the entire model or make risky weight changes. Training happens faster, the resulting artifacts are tiny, and you can easily roll back if an update fails. For most day-to-day tasks, it’s perfect because it adapts the model without forcing you to rebuild your entire stack.

There are many ways to adapt large models, and each comes with trade-offs between control and efficiency. We find prompt tuning sits in an ideal middle ground. It’s fast, cost-effective and leaves the base model untouched. That’s an important advantage for maintaining a stable production environment. You’re teaching a machine that already understands language how to do a specialized job. There are two kinds of prompts: soft prompts, which are learned embedding vectors, and hard prompts, which are plain text.

| Attribute | Soft prompts | Hard prompts |
|---|---|---|
| Interpretability | Low | High |
| Robustness | High | Variable |
| Storage footprint | Tiny | None |
| Readability | None | Textual |

There’s a simple rule of thumb: use hard prompts (plain text) to validate your concept and prototype quickly, then use soft prompts for scalable production deployment. That should be your guiding principle.

Now let’s clear up what is actually being trained, because that is why the approach matters. You’re training a small set of vectors called a soft prompt. It steers the base model’s behavior for your task while the model itself remains unchanged; the prompt is the only new element. That makes this an elegant and efficient solution, and its power lies in its practical application and scalability.
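To make this concrete, here is a minimal, framework-free sketch of what the model actually receives. It assumes a toy vocabulary of 10 token IDs, a hidden size of 8, and 5 virtual tokens; `init_soft_prompt` and `build_model_input` are hypothetical helpers for illustration, not a library API. The frozen embedding table is only read, and the soft prompt vectors are placed in front of the token vectors.

```python
import random

def init_soft_prompt(num_virtual_tokens, hidden_size, seed=0):
    """Hypothetical helper: one trainable vector per virtual token (small random init)."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 0.02) for _ in range(hidden_size)]
            for _ in range(num_virtual_tokens)]

def build_model_input(token_ids, embedding_table, soft_prompt):
    """Look up frozen token embeddings, then prepend the soft prompt vectors."""
    token_vectors = [embedding_table[t] for t in token_ids]  # frozen table: read-only here
    return soft_prompt + token_vectors  # length = virtual tokens + real tokens

hidden_size, vocab_size = 8, 10
rng = random.Random(1)
# Toy stand-in for the base model's (frozen) embedding table.
embedding_table = [[rng.gauss(0.0, 1.0) for _ in range(hidden_size)]
                   for _ in range(vocab_size)]
soft_prompt = init_soft_prompt(num_virtual_tokens=5, hidden_size=hidden_size)

model_input = build_model_input([3, 7, 2], embedding_table, soft_prompt)
print(len(model_input), len(model_input[0]))  # prints: 8 8
```

In a real model the same concatenation happens on tensors inside the forward pass, and only the soft prompt values receive gradient updates.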

You have several options for prompt placement. The best choice depends on the specific problem you’re trying to solve.

When enterprises rely on a strong base model but face a flood of new tasks, full fine-tuning quickly becomes impractical. Training billions of parameters inflates costs, creates operational overhead, and may not even be allowed by vendors. Prompt tuning solves this by freezing the base model and training only small, lightweight sets of prompt embeddings. These “soft prompts” enable efficient adaptation, preserve general capabilities, and drastically reduce per-task costs.

Prompt tuning offers enterprises a practical, cost-effective path to scale AI across diverse workloads. By reducing training overhead, ensuring compliance, and keeping operations lean, it empowers teams to adapt quickly without compromising performance. For many organizations, it’s the smarter alternative to costly retraining—and the future of sustainable AI deployment.


Prompt tuning is becoming increasingly popular compared to fine-tuning because it provides a quicker, more affordable, and more efficient way to adapt large language models to new tasks. Traditional fine-tuning involves retraining billions of parameters with enormous compute, time, and budgets, which is usually infeasible for most teams.

Prompt tuning, however, updates only small task-specific embeddings while keeping the base model frozen. This lightweight approach lets teams roll out several domain-specific applications without training from scratch. The result is efficiency, reusability, and accessibility: organizations can achieve specialized performance with few resources, which explains its rapid uptake across sectors.
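A quick back-of-the-envelope calculation shows why reusing one frozen base matters. The sketch below assumes a hypothetical 7B-parameter base model with hidden size 4096, 20 virtual tokens per task, ten tasks, and 2 bytes per parameter (16-bit weights). The numbers are illustrative arithmetic, not benchmarks.

```python
def storage_bytes(num_tasks, base_params, prompt_params_per_task, bytes_per_param=2):
    """Compare storage: full fine-tuning (one model copy per task) vs prompt tuning."""
    full_ft = num_tasks * base_params * bytes_per_param
    # Prompt tuning: a single shared base plus one small prompt per task.
    prompt_tuning = (base_params + num_tasks * prompt_params_per_task) * bytes_per_param
    return full_ft, prompt_tuning

base_params = 7_000_000_000                        # assumed base model size
hidden_size, num_virtual_tokens = 4096, 20         # assumed configuration
prompt_params = num_virtual_tokens * hidden_size   # trainable values per task

full_ft, prompt_tuning = storage_bytes(10, base_params, prompt_params)
print(prompt_params)                   # 81920
print(full_ft / 1e9)                   # 140.0 (GB for ten full model copies)
print(round(prompt_tuning / 1e9, 3))   # 14.002 (GB for one base plus ten prompts)
```

Each additional task adds only about 160 KB (81,920 values at 2 bytes each) instead of another 14 GB model copy.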

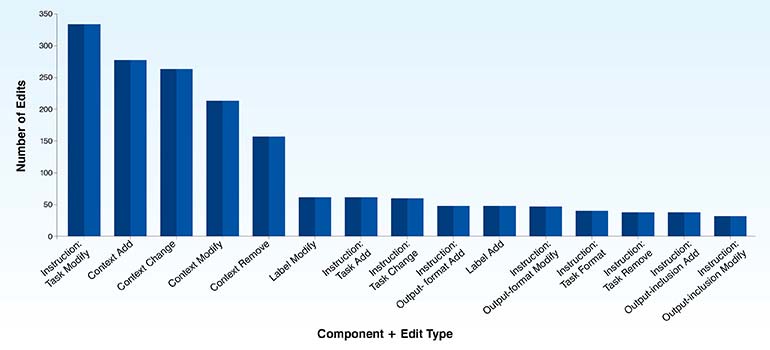

Among the edits applied to prompts, the most common are changes that keep the prompt’s general meaning, followed by additions, changes that alter the meaning, removals, and formatting changes.

These findings underline how important prompt refinement is for making prompts user-friendly, and they can help prompt engineers navigate the complexity of prompt design. This matters all the more as language models become increasingly integrated into enterprise workflows.

Libraries like Hugging Face’s PEFT make the whole process straightforward, letting you graduate from simple prompt engineering to tuned prompts. Other methods like LoRA might be better for long-form generation, but prompt tuning excels in fast setup and minimal storage requirements. It converts LLM adaptation from a massive, one-off research project into a repeatable, scalable process.

This is more than just basic tweaking. We’re strategically injecting the prompt signal right where it will be most effective. Think of it like adjusting a mixer: you choose where the signal goes and how strong it is. This decision depends entirely on your task, data availability and delivery timeline.

| Method | What it does | Why it helps | Best suited for | Cautions / Notes |
|---|---|---|---|---|
| Input Prompt Tuning | | | | |
| Prefix Tuning | | | | |
| Deep Prompt Tuning (P-Tuning v2) | | | | |
| Hybrid Methods | | | | |
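The difference between input-level and deep prompt placement can be sketched with a toy, framework-free model. Assumptions: `toy_layer` is a hypothetical stand-in for a frozen transformer layer (each position is mixed with the sequence mean), and deep prompting is modeled as giving every layer its own trainable prompt block at the prompt positions. That captures the general idea behind P-Tuning v2, not its exact implementation; real prefix and deep prompt implementations typically inject per-layer key/value prefixes, which doubles the per-layer count shown here.

```python
def toy_layer(seq, scale):
    """Hypothetical frozen layer: each position is mixed with the sequence mean."""
    dim = len(seq[0])
    mean = [sum(v[j] for v in seq) / len(seq) for j in range(dim)]
    return [[scale * (v[j] + mean[j]) for j in range(dim)] for v in seq]

def run_input_prompt(tokens, prompt, scales):
    """Input prompt tuning: trainable vectors enter once, before the first layer."""
    seq = [row[:] for row in prompt] + tokens
    for scale in scales:
        seq = toy_layer(seq, scale)
    return seq

def run_deep_prompt(tokens, layer_prompts, scales):
    """Deep prompt tuning (toy): each layer gets its own trainable prompt block."""
    n = len(layer_prompts[0])
    seq = tokens
    for depth, scale in enumerate(scales):
        # Layer 0: prepend the prompt. Later layers: replace the prompt positions.
        rest = seq if depth == 0 else seq[n:]
        seq = toy_layer([row[:] for row in layer_prompts[depth]] + rest, scale)
    return seq

def trainable_values(num_layers, num_virtual_tokens, hidden_size, deep):
    """Trainable prompt values: one block for input tuning, one per layer for deep tuning."""
    return (num_layers if deep else 1) * num_virtual_tokens * hidden_size

tokens = [[1.0, 0.0], [0.0, 1.0]]
scales = [1.0, 0.5, 2.0]                                     # three frozen toy layers
prompt = [[0.1, 0.2]]                                        # one virtual token, size 2
layer_prompts = [[[0.1, 0.2]], [[0.3, -0.1]], [[0.0, 0.5]]]  # one block per layer

print(len(run_input_prompt(tokens, prompt, scales)))        # 3
print(len(run_deep_prompt(tokens, layer_prompts, scales)))  # 3
print(trainable_values(24, 20, 1024, deep=False),
      trainable_values(24, 20, 1024, deep=True))            # 20480 491520
```

The count is the practical trade-off: per-layer prompts multiply the trainable values by the number of layers, giving the signal more places to act at a still-small storage cost.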

Some quick tips

Prompt tuning begins with a frozen base LLM, where only small trainable prompts are optimized. You define prompt length and placement, then train using task-specific data. Unlike full fine-tuning, only the prompt’s parameters update, keeping the model fixed. Training stability relies on validation monitoring, careful hyperparameters, and a balanced dataset.

Once trained, the prompt file attaches at inference, guiding task-specific behavior efficiently without retraining the entire model.
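The training loop described above can be sketched end to end without an ML framework. This is a toy, not a real LLM: the assumed "base model" mean-pools the sequence [soft prompt; input vectors] and takes a dot product with frozen `weights`, the loss is mean squared error, and the hyperparameters (`lr`, `patience`, and so on) are illustrative. Only the prompt receives gradient updates, and the best prompt is kept by validation loss with early stopping. In a real framework the same pattern means freezing the base model’s parameters and handing only the prompt parameters to the optimizer.

```python
import random

def forward(prompt, tokens, weights):
    """Toy frozen model: mean-pool [prompt; tokens], then dot with frozen weights."""
    seq = prompt + tokens
    pooled = [sum(v[j] for v in seq) / len(seq) for j in range(len(weights))]
    return sum(w * h for w, h in zip(weights, pooled))

def mse(prompt, data, weights):
    return sum((forward(prompt, x, weights) - y) ** 2 for x, y in data) / len(data)

def train_soft_prompt(train, val, weights, num_virtual_tokens=4, lr=0.5,
                      epochs=100, patience=5, seed=0):
    rng = random.Random(seed)
    dim = len(weights)
    prompt = [[rng.uniform(-0.1, 0.1) for _ in range(dim)] for _ in range(num_virtual_tokens)]
    start_val = mse(prompt, val, weights)
    best_val, best_prompt, stale = start_val, [r[:] for r in prompt], 0
    for _ in range(epochs):
        for tokens, target in train:
            err = forward(prompt, tokens, weights) - target
            seq_len = num_virtual_tokens + len(tokens)
            # d(err^2)/d(prompt[i][j]) = 2 * err * weights[j] / seq_len; weights never change
            for row in prompt:
                for j in range(dim):
                    row[j] -= lr * 2 * err * weights[j] / seq_len
        val_loss = mse(prompt, val, weights)   # validation monitoring
        if val_loss < best_val:
            best_val, best_prompt, stale = val_loss, [r[:] for r in prompt], 0
        else:
            stale += 1
            if stale >= patience:              # early stopping
                break
    return best_prompt, start_val, best_val

rng = random.Random(42)
weights = [0.5, -0.3]              # toy frozen base-model weights
frozen_copy = list(weights)
examples = [([[rng.uniform(-1, 1), rng.uniform(-1, 1)]], 1.0) for _ in range(40)]
train, val = examples[:30], examples[30:]

prompt, start_val, best_val = train_soft_prompt(train, val, weights)
print(round(start_val, 3), round(best_val, 3))
```

The returned `prompt` is the whole training artifact: a handful of numbers that can be saved and attached at inference.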

Key considerations include:

Prompt tuning balances efficiency and control, enabling targeted LLM adaptation with minimal overhead—streamlining deployment while retaining stability, scalability, and strong performance across diverse tasks.

When adopted successfully, prompt tuning balances efficiency, accuracy, and scalability. It uses lightweight embeddings to specialize large models without retraining, delivers consistent task performance across domains, minimizes compute costs, and keeps prompts reusable. The result is faster deployment, precise outputs, and adaptability to evolving business or research needs.

When you have one model but a dozen different tasks, prompt tuning is your most effective approach. We use a small, dedicated prompt for each task and leave the main model untouched. This helps us rapidly roll out updates and new capabilities. We only use heavier tools like adapters when we absolutely must. Now let’s look at how to choose the right prompt tuning approach.

Prompt tuning is our default choice for most common tasks because it’s lightweight and flexible. For more complex jobs like named entity recognition or parsing unstructured documents, prefix or deep prompts are probably what you need.

Add LoRA or a hybrid setup when you need more capacity but a full retrain is off the table due to budget constraints. Nothing beats full fine-tuning for mission-critical tasks where every point of accuracy matters, but these scenarios are rare in practice. When dealing with rapidly changing information, combine prompts with retrieval-augmented generation (RAG) to keep answers factually accurate.

For initial exploration, always start with text prompts. Once you find a pattern that works well, formalize it as a trainable soft prompt.

We adhere to a default strategy: prompt tuning for scalable tasks, prefix or deep prompts for difficult NLU jobs, LoRA when more capacity is needed, and full fine-tuning only when a budget owner mandates it. This pragmatic approach has proven effective.
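This default strategy can be written down as a small decision function, which makes it easy to review and apply consistently. The `TaskProfile` fields are hypothetical flags summarizing this section’s guidance, not a standard API.

```python
from dataclasses import dataclass

@dataclass
class TaskProfile:
    """Hypothetical task description used only for this sketch."""
    complex_nlu: bool = False          # e.g. named entity recognition, parsing unstructured documents
    needs_more_capacity: bool = False  # prompts alone are not reaching the quality bar
    full_ft_mandated: bool = False     # a budget owner explicitly requires full fine-tuning
    fast_changing_facts: bool = False  # answers depend on rapidly changing information

def choose_method(task: TaskProfile) -> str:
    """Prompt tuning by default; heavier methods only when the profile calls for them."""
    if task.full_ft_mandated:
        method = "full fine-tuning"
    elif task.needs_more_capacity:
        method = "LoRA"
    elif task.complex_nlu:
        method = "prefix or deep prompts"
    else:
        method = "prompt tuning"
    if task.fast_changing_facts:
        method += " + RAG"             # pair with retrieval for factual accuracy
    return method

print(choose_method(TaskProfile()))                          # prompt tuning
print(choose_method(TaskProfile(complex_nlu=True)))          # prefix or deep prompts
print(choose_method(TaskProfile(fast_changing_facts=True)))  # prompt tuning + RAG
```

The checks run from most to least expensive on purpose, so an explicit mandate or a capacity need always overrides the lightweight default.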

| Method | Trained params per task | Serve-time footprint | Strengths | Gaps |
|---|---|---|---|---|
| Prompt tuning | ~0.01–3% | Base + small prompt | | Lower interpretability than text |
| Prefix / deep prompts | 0.1–3% | Base + per-layer states | | More tuning knobs |
| LoRA | ~0.1–1% | Base + low-rank adapters | Higher capacity within PEFT | Heavier than pure prompts |
| Full fine-tuning | 100% | One model per task | Maximum task control | High cost and storage |
| Few-shot in context | 0% | Base only | Instant trials in the prompt window | |
| Prompt engineering | 0% | Base only | | Stability varies by task |

Prompt tuning works particularly well for well-defined tasks such as chatbot adaptation, document summarization, or PII redaction. It’s also an excellent tool for rapid prototyping when you need to demonstrate a concept quickly.

| Use Case | Your Data | Prompt Pattern | What You Get | How to Deploy |
|---|---|---|---|---|
| Enterprise NLP Pipelines | | | | |
| Chatbot Adaptation | | | | |
| Custom Document Summarization | | | | |
| Academic Research | | | | |

Building a prompt tuning workflow requires a structured approach: define objectives, prepare balanced data, then design, train, test, and deploy prompts, with continuous monitoring as business needs evolve.


A well-designed prompt tuning workflow ensures efficiency, adaptability, and consistent performance, helping your models stay reliable, cost-effective, and aligned with changing business goals.

The main benefits of prompt tuning are fast training and trivial swapping of tasks. Operationally the key advantage is that the base model remains untouched, ensuring stability and protecting it from any experimental changes.
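Task swapping in practice can be as simple as a lookup: one frozen base is shared, and each request picks up its task’s soft prompt at inference time. `PROMPTS`, `base_model`, and `serve` are hypothetical names, and `base_model` is a placeholder that only reports what it received rather than a real LLM.

```python
from typing import Callable, Dict, List

Vector = List[float]

def base_model(sequence: List[Vector]) -> str:
    """Placeholder for the frozen LLM: it only reports the sequence length it received."""
    return f"generated from {len(sequence)} positions"

# Hypothetical per-task soft prompts; the base model is never copied or modified.
PROMPTS: Dict[str, List[Vector]] = {
    "summarize": [[0.1, 0.2], [0.0, -0.1]],
    "redact_pii": [[0.3, 0.3], [0.2, 0.1], [-0.1, 0.0]],
}

def serve(task: str, input_vectors: List[Vector],
          model: Callable[[List[Vector]], str] = base_model) -> str:
    """Attach the task's prompt, then run the shared frozen base."""
    if task not in PROMPTS:
        raise KeyError(f"no prompt registered for task {task!r}")
    return model(PROMPTS[task] + input_vectors)

request = [[1.0, 0.5], [0.4, 0.9]]
print(serve("summarize", request))    # generated from 4 positions
print(serve("redact_pii", request))   # generated from 5 positions
```

Adding or swapping a task means changing one dictionary entry; the base model deployment stays exactly as it was.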

Soft prompts are non-interpretable vectors, so robust logging and evaluation are important to understand their behavior. They can also overfit to spurious patterns in your data, so proper data splitting and careful training are necessary. Finally, avoid trying to merge too many tasks into a single prompt.
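One way to get proper data splitting is a deterministic, hash-based split: each example ID always lands in the same bucket, so reruns never reshuffle data between training and evaluation. This sketch assumes every example has a stable string ID; `assign_split` is a hypothetical helper and the 80/10/10 ratios are illustrative.

```python
import hashlib

def assign_split(example_id: str, val_frac: float = 0.1, test_frac: float = 0.1) -> str:
    """Map an ID to 'train', 'val', or 'test' using a stable hash (same answer every run)."""
    digest = hashlib.sha256(example_id.encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) / 0xFFFFFFFF   # roughly uniform in [0, 1]
    if bucket < test_frac:
        return "test"
    if bucket < test_frac + val_frac:
        return "val"
    return "train"

ids = [f"ticket-{i}" for i in range(1000)]
splits = {name: [i for i in ids if assign_split(i) == name] for name in ("train", "val", "test")}
print({name: len(members) for name, members in splits.items()})
```

If near-duplicates exist, hash the ID of the source record (for example, the originating document or customer) instead of each row, so related examples cannot leak across splits.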

Always clean your data, restrict access to prompts for privacy, and keep a human in the loop for compliance in sensitive domains.

| Framework | Supported Methods | Key Features |
|---|---|---|
| Hugging Face PEFT | Prompt, Prefix, LoRA | Common backbone integration |
| OpenPrompt | Templates, Verbalizers | Training loops, Plug-and-play tasks |
| Ops add-ons | Registry, Dashboards | Cost/quality tracking |

No matter which tool you choose, just make sure it gives you robust experiment tracking, a central prompt registry and a dashboard for monitoring performance and cost.
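A central prompt registry does not need to be elaborate to be useful. The sketch below is a hypothetical in-memory version (class and method names are made up for illustration): it stores versioned prompt artifacts with illustrative evaluation scores, tracks which version is live per task, and supports one-step rollback. A production registry would persist this to a database or artifact store.

```python
from dataclasses import dataclass, field
from typing import Dict, List

@dataclass
class PromptVersion:
    version: int
    vectors: List[List[float]]
    val_score: float

@dataclass
class PromptRegistry:
    """Hypothetical in-memory registry: versioned prompts, a live pointer, rollback history."""
    versions: Dict[str, List[PromptVersion]] = field(default_factory=dict)
    live: Dict[str, int] = field(default_factory=dict)           # task -> live version
    history: Dict[str, List[int]] = field(default_factory=dict)  # previously live versions

    def register(self, task: str, vectors: List[List[float]], val_score: float) -> int:
        entries = self.versions.setdefault(task, [])
        entries.append(PromptVersion(len(entries) + 1, vectors, val_score))
        return entries[-1].version

    def promote(self, task: str, version: int) -> None:
        if task in self.live:
            self.history.setdefault(task, []).append(self.live[task])
        self.live[task] = version

    def rollback(self, task: str) -> int:
        previous = self.history.get(task)
        if not previous:
            raise ValueError(f"nothing to roll back for {task!r}")
        self.live[task] = previous.pop()
        return self.live[task]

    def get_live(self, task: str) -> PromptVersion:
        return self.versions[task][self.live[task] - 1]

registry = PromptRegistry()
v1 = registry.register("summarize", [[0.1, 0.2]], val_score=0.81)
registry.promote("summarize", v1)
v2 = registry.register("summarize", [[0.3, 0.1]], val_score=0.84)
registry.promote("summarize", v2)
print(registry.get_live("summarize").version)   # 2
print(registry.rollback("summarize"))           # 1
```

Because a rollback only moves the live pointer, reverting a bad update is instant and the base model is never involved.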

Prompt tuning is a practical and scalable method for adapting LLMs without crazy costs. We train small, focused prompts while keeping the base model frozen. You can iterate faster, reduce compute bills and deploy new capabilities in a reasonable amount of time.

This gives us a major operational advantage. A shared prompt library helps you reuse and update work across different projects, improving consistency. This approach also satisfies audit and compliance requirements without slowing down development. You get the specific task control you need without the massive overhead of traditional fine tuning.

So, here’s what you do.

You need to monitor performance continuously. Be prepared to update your prompts as your data and business needs change, because they will. Prompt tuning isn’t a silver bullet, but it’s a powerful, scalable tool that aligns with the practical realities of building and maintaining AI-driven products.
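Continuous monitoring can start as simply as tracking a rolling quality metric per task and flagging the prompt for review when it dips. This sketch assumes each production request eventually gets a pass/fail quality label (for example, from human review); `RollingMonitor` is a hypothetical class, and the window size and threshold are illustrative.

```python
from collections import deque

class RollingMonitor:
    """Hypothetical monitor: pass rate over the last `window` labeled requests for one prompt."""

    def __init__(self, window: int = 100, threshold: float = 0.9):
        self.results = deque(maxlen=window)   # oldest results drop off automatically
        self.threshold = threshold

    def record(self, passed: bool) -> None:
        self.results.append(1 if passed else 0)

    def pass_rate(self) -> float:
        return sum(self.results) / len(self.results) if self.results else 1.0

    def needs_review(self) -> bool:
        # Alert only once the window is full, to avoid noise from a handful of requests.
        return len(self.results) == self.results.maxlen and self.pass_rate() < self.threshold

monitor = RollingMonitor(window=10, threshold=0.8)
for passed in [True] * 9 + [False]:
    monitor.record(passed)
print(monitor.pass_rate(), monitor.needs_review())   # 0.9 False
for passed in [False] * 3:
    monitor.record(passed)
print(monitor.pass_rate(), monitor.needs_review())   # 0.6 True
```

A review flag would then trigger retraining or a registry rollback for that task’s prompt.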


Disclaimer:

HitechDigital Solutions LLP and Hitech BPO will never ask for money or commission to offer jobs or projects. In the event you are contacted by any person with job offer in our companies, please reach out to us at info@hitechbpo.com
