Data Augmentation: Techniques, Benefits and Examples

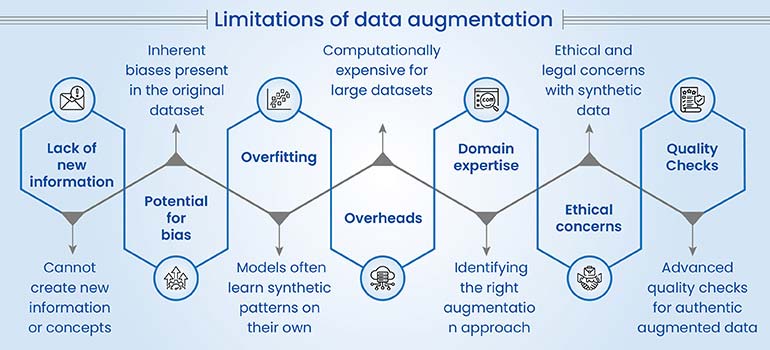

Data augmentation helps machine learning projects overcome scarce data, reduce overfitting, and improve model accuracy. It saves time, cuts costs, and boosts model performance when applied with the right techniques and expertise.


Machine learning (ML) algorithms and artificial intelligence (AI) models are only as good as the data they learn from. Large, accurate datasets are the foundation of robust models, but collecting data at that volume is costly, time-consuming and sometimes impractical.

This is where data augmentation proves to be a smart move. By programmatically transforming existing data through image rotations, text paraphrasing or audio pitch shifts, data augmentation creates new, diverse training examples for ML models without the additional cost of collecting more data.

In this guide, we’ll cover what data augmentation is, its techniques, benefits, challenges, limitations and examples. So, get ready to take a deep dive into the world of data augmentation and what it offers AI and ML models.

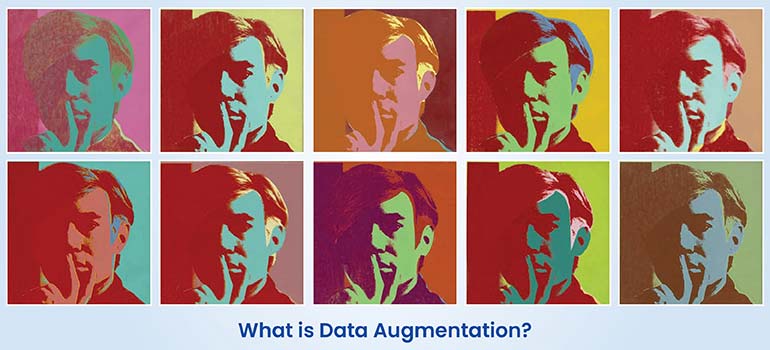

Data augmentation is how we artificially generate new data from what we already have. It’s a great way to improve deep learning performance, especially when the original dataset is small or overfitting is a problem. The technique just makes small changes to existing data, which in turn increases the dataset’s size and diversity.

Simple changes like cropping, rotating or flipping images, adding noise to audio, or rephrasing text expose models to variations that improve accuracy and help prevent overfitting.

To really get it, you need to understand the difference between data augmentation and synthetic data. They both enrich data, but augmentation just modifies existing data while synthetic data creates brand new data points. Think of it this way: augmentation adds variation, but synthetic data generates something entirely new using algorithms.

Use cases include recognizing objects from various angles, helping audio models understand speech in noisy conditions and aiding motion tracking in video. For tabular data, it helps handle class imbalance for better predictions.

Data augmentation helps AI and ML models to learn and generalize effectively and perform better by making the most of the data we have. Let’s look at a few reasons why it’s so important for driving high performance.

Benefits of data augmentation include

Data augmentation spans a wide range of data types, and each needs its own specialized techniques. Whether you’re training a convolutional neural network for image recognition, an NLP model for sentiment analysis, or a speech-to-text system, choosing and applying the right augmentation technique can decide whether your model generalizes well or underperforms.

Let’s look at how we apply augmentation across image, text, audio, tabular and video data.

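| Data Type | Common Techniques | Example Use Cases |
|---|---|---|
| Image | Rotation, Flipping, Cropping, Noise Injection, Color Jitter | Medical Imaging, Object Detection |
| Text | Synonym Replacement, Back-Translation, Tokenization, Stopword Removal, Named Entity Recognition | Chatbots, Sentiment Analysis |
| Audio | Noise Addition, Pitch Shifting, Time Stretching, MFCC Extraction, Silence Removal | Voice Recognition, Speech Emotion Recognition |
| Tabular | SMOTE, CTGAN, Normalization, One-Hot Encoding, Imputation | Fraud Detection, Customer Segmentation |
| Video | Frame Sampling, Optical Flow, Temporal Smoothing, Background Subtraction, Object Tracking | Surveillance, Action Recognition |

Some entries in this table, such as tokenization, stopword removal, named entity recognition, MFCC extraction, silence removal, normalization, one-hot encoding, imputation, optical flow, background subtraction and object tracking, are preprocessing, feature-extraction or analysis steps that typically accompany augmentation rather than augmentation methods in their own right.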

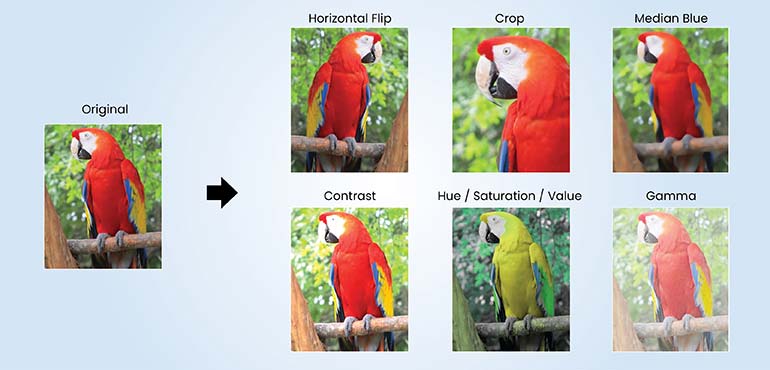

Image data augmentation artificially expands the training dataset for computer vision models. We apply transformations such as rotations and flips to existing images to create new training samples. These changes simulate variations you’d see in real-world images, which makes the model more robust when it’s deployed.
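As a rough sketch of how such transformations combine, the function below applies a random horizontal flip, a random 90-degree rotation, a random crop and additive Gaussian noise to an image stored as a NumPy array. It assumes an H x W x C `uint8` image; the 90% crop size and the noise standard deviation of 8 are arbitrary illustrative choices, not recommended values. In practice, libraries such as torchvision or Albumentations offer these operations as composable transforms.

```python
import numpy as np


def augment_image(img: np.ndarray, rng: np.random.Generator) -> np.ndarray:
    """Return a randomly transformed copy of an H x W x C uint8 image."""
    out = img
    # Horizontal flip with probability 0.5
    if rng.random() < 0.5:
        out = out[:, ::-1, :]
    # Rotation by a random multiple of 90 degrees (no interpolation needed)
    out = np.rot90(out, k=int(rng.integers(0, 4)), axes=(0, 1))
    # Random crop keeping 90% of each side (illustrative ratio)
    h, w = out.shape[:2]
    ch, cw = int(h * 0.9), int(w * 0.9)
    top = int(rng.integers(0, h - ch + 1))
    left = int(rng.integers(0, w - cw + 1))
    out = out[top:top + ch, left:left + cw, :]
    # Additive Gaussian noise (illustrative std of 8), clipped to the valid pixel range
    noisy = out.astype(np.float32) + rng.normal(0.0, 8.0, size=out.shape)
    return np.clip(noisy, 0, 255).astype(np.uint8)


rng = np.random.default_rng(0)
image = rng.integers(0, 256, size=(64, 64, 3), dtype=np.uint8)  # placeholder for a real photo
variants = [augment_image(image, rng) for _ in range(4)]
```

Each call produces a different variant, so one labelled image can yield several training examples that all share its label.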

Some of the common techniques for image data augmentation include

Real-world examples of image data augmentation

Medical imaging: For rare conditions, there aren’t enough labelled images. In these cases, techniques like rotation, scaling and elastic transformations can simulate different scans to help us train more robust diagnostic models.

Autonomous driving: Models often fail under unfamiliar lighting conditions or viewing angles. Techniques such as brightness adjustment prepare self-driving models for situations like night driving or glare.
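A minimal sketch of brightness and contrast jitter on a NumPy image array. The sampling ranges in `simulate_lighting` are assumptions chosen for illustration: a darker, lower-contrast sample loosely resembles low light and a brighter one resembles glare, but neither is a physically accurate lighting model.

```python
import numpy as np


def adjust_brightness_contrast(img: np.ndarray, brightness: float, contrast: float) -> np.ndarray:
    """Scale pixel values around the image mean by `contrast`, then shift them by `brightness`."""
    x = img.astype(np.float32)
    mean = x.mean()
    x = (x - mean) * contrast + mean + brightness
    return np.clip(x, 0, 255).astype(np.uint8)


def simulate_lighting(img: np.ndarray, rng: np.random.Generator) -> np.ndarray:
    # Illustrative (assumed) ranges: negative offsets darken, positive offsets brighten
    brightness = rng.uniform(-80.0, 80.0)
    contrast = rng.uniform(0.6, 1.4)
    return adjust_brightness_contrast(img, brightness, contrast)
```

Clipping to 0 to 255 mimics how real sensors saturate, which is roughly what happens to pixels under strong glare.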

Benefits of image data augmentation

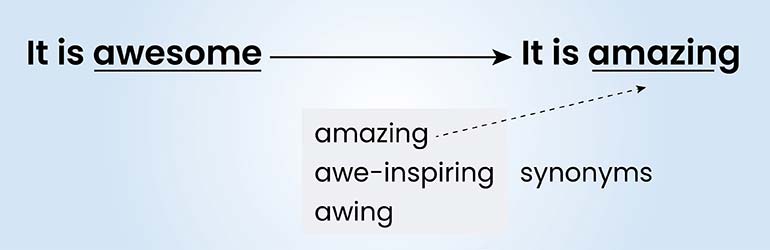

Text data augmentation creates new text samples by making small changes to existing text while keeping the original meaning intact. It’s a simple way to increase the size and diversity of a text dataset, and it is especially helpful for NLP tasks with limited labelled data because it improves model generalization.
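Two of the simplest word-level edits are random swap and random deletion, both part of the "Easy Data Augmentation" (EDA) family of operations. The sketch below works on a pre-tokenized list of words; the number of swaps and the deletion probability are left to the caller, and keeping them small helps the edited sentence retain its original meaning and label.

```python
import random


def random_swap(words: list[str], n_swaps: int, rng: random.Random) -> list[str]:
    """Swap the positions of two randomly chosen words, n_swaps times."""
    words = list(words)
    if len(words) < 2:
        return words
    for _ in range(n_swaps):
        i, j = rng.sample(range(len(words)), 2)
        words[i], words[j] = words[j], words[i]
    return words


def random_deletion(words: list[str], p: float, rng: random.Random) -> list[str]:
    """Drop each word independently with probability p, keeping at least one word."""
    if not words:
        return []
    kept = [w for w in words if rng.random() >= p]
    return kept if kept else [rng.choice(words)]


rng = random.Random(7)
tokens = "the delivery was fast and the packaging was intact".split()
print(" ".join(random_swap(tokens, 1, rng)))
print(" ".join(random_deletion(tokens, 0.1, rng)))
```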

Some of the common techniques for text data augmentation include

Real-world examples of text data augmentation

E-commerce product reviews: Sentiment models can struggle with informal expressions. Synonym replacement or paraphrasing adds varied wordings of the same sentiment to the training data. For example, pairing ‘a great purchase’ with ‘happy with what I bought’ teaches the model that both phrasings express positive sentiment.
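The synonym substitution in the product-review example can be sketched with a lookup table. The `SYNONYMS` dictionary below is a tiny, hypothetical stand-in; real pipelines typically draw candidates from a thesaurus such as WordNet or from embedding neighbours, and should filter out substitutions that would flip the sentiment.

```python
import random

# Hypothetical, hand-written synonym table for illustration only
SYNONYMS = {
    "great": ["excellent", "fantastic"],
    "purchase": ["buy", "order"],
    "happy": ["pleased", "satisfied"],
}


def synonym_replace(sentence: str, n: int, rng: random.Random) -> str:
    """Replace up to n words that have an entry in SYNONYMS with a random synonym."""
    words = sentence.split()
    # Punctuation attached to a word prevents a match in this simple version
    candidates = [i for i, w in enumerate(words) if w.lower() in SYNONYMS]
    rng.shuffle(candidates)
    for i in candidates[:n]:
        # Replacements come out in lowercase; capitalization is not preserved
        words[i] = rng.choice(SYNONYMS[words[i].lower()])
    return " ".join(words)


rng = random.Random(42)
review = ("a great purchase and I am happy", "positive")
augmented = [(synonym_replace(review[0], 2, rng), review[1]) for _ in range(3)]
```

Every augmented sentence keeps the original "positive" label, which is what lets the model see more wordings of the same sentiment.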

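Virtual assistants: When a bot fails to understand certain phrasings, back-translation (translating an utterance into another language and back) or word substitution can generate alternative phrasings of the training utterances.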

News classification: If you have only a few labelled articles per category, shuffling the sentences within each article can generate additional training samples.
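A sketch of sentence shuffling for a document classifier. It assumes the label depends on the article’s overall content rather than on sentence order, which is often reasonable for topic classification but not for tasks where order matters. The regular-expression sentence splitter is deliberately naive; a proper sentence tokenizer handles abbreviations and quotations better.

```python
import random
import re

# Naive splitter: break after '.', '!' or '?' followed by whitespace
SENTENCE_BOUNDARY = re.compile(r"(?<=[.!?])\s+")


def shuffle_sentences(text: str, rng: random.Random) -> str:
    sentences = SENTENCE_BOUNDARY.split(text.strip())
    rng.shuffle(sentences)
    return " ".join(sentences)


def augment_article(text: str, label: str, n_copies: int, rng: random.Random) -> list[tuple[str, str]]:
    """Create n_copies reordered versions of one article, all with the original label."""
    return [(shuffle_sentences(text, rng), label) for _ in range(n_copies)]


article = "Shares rose sharply. Analysts expect further gains! Will the rally last?"
samples = augment_article(article, "business", 3, random.Random(1))
```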

Benefits of text data augmentation

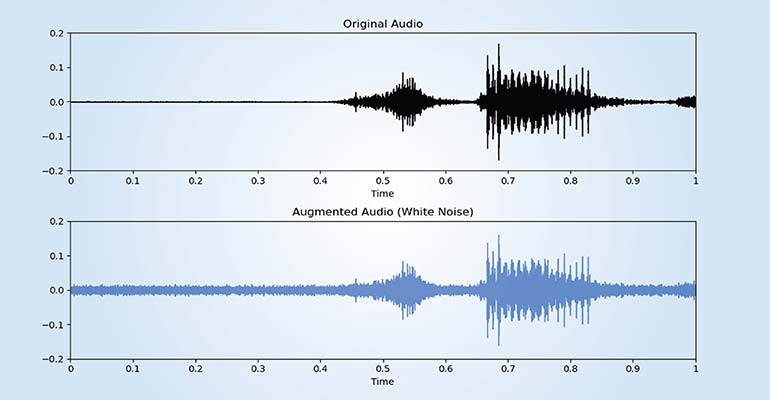

Audio data augmentation artificially increases the size and diversity of an audio dataset by creating modified versions of existing audio samples. This helps machine learning models generalize better and become more robust to variations in real-world audio, so they perform better in noisy environments.

Audio data augmentation techniques

Real-world examples of audio data augmentation

Voice assistants (e.g., Alexa, Google Assistant): These assistants often struggle to recognize voices in noisy places. So we train the models to work in those environments by adding background noise to the training data.
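Background noise is usually mixed in at a controlled signal-to-noise ratio, SNR_dB = 10 · log10(P_speech / P_noise), where P is the mean power (mean squared amplitude) of each signal. The sketch below scales a noise clip so the mix hits a requested SNR. It assumes mono float arrays at the same sample rate; the sine-wave "speech" and white noise in the usage lines are placeholders for real recordings.

```python
import numpy as np


def add_noise_at_snr(speech: np.ndarray, noise: np.ndarray, snr_db: float,
                     rng: np.random.Generator) -> np.ndarray:
    """Mix a random segment of `noise` into `speech` at the requested SNR in decibels."""
    # Loop the noise if it is shorter than the speech, then pick a random segment
    if len(noise) < len(speech):
        noise = np.tile(noise, int(np.ceil(len(speech) / len(noise))))
    start = int(rng.integers(0, len(noise) - len(speech) + 1))
    segment = noise[start:start + len(speech)]
    speech_power = np.mean(speech ** 2)
    noise_power = np.mean(segment ** 2) + 1e-12  # guard against silent noise clips
    # Choose the gain so that 10*log10(speech_power / (gain**2 * noise_power)) == snr_db
    gain = np.sqrt(speech_power / (noise_power * 10 ** (snr_db / 10)))
    return speech + gain * segment


sr = 16_000
rng = np.random.default_rng(0)
t = np.arange(sr) / sr
speech = 0.5 * np.sin(2 * np.pi * 220 * t)  # placeholder for a real utterance
noise = rng.normal(0.0, 1.0, size=sr // 2)  # placeholder for recorded background noise
noisy = add_noise_at_snr(speech, noise, snr_db=10.0, rng=rng)
```

Sampling the SNR per example (for instance from a range of values) exposes the model to both mildly and heavily degraded audio.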

Speech recognition (ASR Systems): Limited accents or speaking styles in training data can be a problem. Pitch shifting or speed changes can simulate a variety of voices to improve general recognition.
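The simplest way to vary speaking speed is to resample the waveform, sketched below with linear interpolation. This changes speed and pitch together: a factor of 1.1 shortens the clip to roughly 1/1.1 of its length and multiplies every frequency by 1.1. Shifting pitch without changing duration, or the reverse, needs a phase-vocoder style method such as `librosa.effects.pitch_shift` and `librosa.effects.time_stretch`. Plain linear interpolation also skips anti-aliasing filtering, which is acceptable for a sketch but not ideal for large speed-ups.

```python
import numpy as np


def change_speed(audio: np.ndarray, factor: float) -> np.ndarray:
    """Resample so the clip plays `factor` times faster at the same sample rate.

    factor > 1 shortens the clip and raises the pitch; factor < 1 does the opposite.
    """
    n_out = int(round(len(audio) / factor))
    # Fractional positions in the original signal that each output sample reads from
    src_positions = np.linspace(0, len(audio) - 1, num=n_out)
    return np.interp(src_positions, np.arange(len(audio)), audio)


sr = 16_000
t = np.arange(sr) / sr
tone = np.sin(2 * np.pi * 440 * t)  # placeholder for a real voice clip
variants = {f: change_speed(tone, f) for f in (0.9, 1.1, 2.0)}
```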

Benefits of audio data augmentation

Tabular data augmentation enhances machine learning models by increasing the size and diversity of tabular datasets. It’s especially useful when we’re dealing with missing values or limited, imbalanced data. It works by generating or modifying structured records to improve model training, which helps in domains like finance, healthcare and fraud detection.

Key techniques used in tabular data augmentation

Real-world examples of tabular data augmentation

Fraud detection: Fraudulent transactions are rare compared with normal ones, so it’s often hard to get enough examples for models to work well. This is where SMOTE or GAN-based synthesis comes in to balance datasets and improve anomaly detection.
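The core of SMOTE (Synthetic Minority Over-sampling Technique) is interpolation: each synthetic row lies on the line segment between a minority-class row and one of its k nearest minority-class neighbours, x_new = x_i + u · (x_nn - x_i), with u drawn uniformly from [0, 1). The NumPy sketch below implements that idea for numeric features only and uses brute-force distances, so it suits small examples; the `imbalanced-learn` package provides a maintained `SMOTE` implementation. Whichever you use, oversample only the training split so synthetic rows never leak into evaluation.

```python
import numpy as np


def smote(minority: np.ndarray, n_new: int, k: int, rng: np.random.Generator) -> np.ndarray:
    """Return n_new synthetic rows interpolated between minority rows and their nearest minority neighbours."""
    minority = np.asarray(minority, dtype=float)
    if len(minority) < 2:
        raise ValueError("need at least two minority rows")
    # Brute-force pairwise Euclidean distances within the minority class
    diffs = minority[:, None, :] - minority[None, :, :]
    dists = np.sqrt((diffs ** 2).sum(axis=-1))
    np.fill_diagonal(dists, np.inf)  # a row is not its own neighbour
    k = min(k, len(minority) - 1)
    neighbours = np.argsort(dists, axis=1)[:, :k]

    base = rng.integers(0, len(minority), size=n_new)          # seed rows
    chosen = neighbours[base, rng.integers(0, k, size=n_new)]  # one neighbour per seed
    u = rng.random((n_new, 1))                                 # interpolation weights in [0, 1)
    return minority[base] + u * (minority[chosen] - minority[base])


rng = np.random.default_rng(0)
fraud_rows = rng.normal(loc=[5.0, 1.0], scale=0.5, size=(20, 2))  # placeholder minority features
synthetic = smote(fraud_rows, n_new=80, k=5, rng=rng)
```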

Customer churn prediction: If the training data has limited churn cases, it’s tough for the model to spot potential churners. Techniques like SMOTE and feature swapping help train the model to do a better job.
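"Feature swapping" can mean different things. One common form, often called swap noise, replaces a random subset of cells with values taken from the same column of other rows, so every generated value is one that actually occurs in the data. The sketch below assumes that interpretation and applies it to churn-class rows only, keeping their label; the column meanings and the 15% swap rate are hypothetical examples.

```python
import numpy as np


def swap_noise(X: np.ndarray, p: float, rng: np.random.Generator) -> np.ndarray:
    """With probability p per cell, replace the value with the same column's value from a random row."""
    X = np.asarray(X)
    n_rows, n_cols = X.shape
    mask = rng.random((n_rows, n_cols)) < p
    donor_rows = rng.integers(0, n_rows, size=(n_rows, n_cols))
    # Element [i, j] of `donors` is X[donor_rows[i, j], j]
    donors = X[donor_rows, np.arange(n_cols)]
    return np.where(mask, donors, X)


rng = np.random.default_rng(1)
# Hypothetical churn-class rows: tenure in months, monthly charge, support tickets
churners = np.array([[3, 70.0, 4], [5, 85.5, 2], [2, 99.0, 6], [8, 60.0, 3]])
extra_churners = swap_noise(churners, p=0.15, rng=rng)  # all rows keep the "churn" label
```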

Benefits of tabular data augmentation

Video data augmentation simulates real-world variations by applying transformations to the frames of existing clips, artificially expanding video datasets and improving model performance. Because it preserves both spatial and temporal information, the technique helps models with tasks like tracking and surveillance.
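One practical consequence of preserving temporal information is that spatial transforms are usually sampled once per clip rather than once per frame; independent per-frame crops or flips would add jitter that never occurs in real footage. A minimal sketch, assuming clips stored as T x H x W x C NumPy arrays and an illustrative 90% crop:

```python
import numpy as np


def augment_clip_spatially(clip: np.ndarray, rng: np.random.Generator,
                           crop_frac: float = 0.9) -> np.ndarray:
    """Apply one random flip decision and one random crop window to every frame of a T x H x W x C clip."""
    _, h, w, _ = clip.shape
    if rng.random() < 0.5:
        clip = clip[:, :, ::-1, :]  # horizontal flip of all frames
    ch, cw = int(h * crop_frac), int(w * crop_frac)
    top = int(rng.integers(0, h - ch + 1))
    left = int(rng.integers(0, w - cw + 1))
    return clip[:, top:top + ch, left:left + cw, :]  # same window for all frames


rng = np.random.default_rng(3)
clip = rng.integers(0, 256, size=(16, 32, 32, 3), dtype=np.uint8)  # placeholder 16-frame clip
augmented = augment_clip_spatially(clip, rng)
```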

Key techniques include

Real-world examples of video data augmentation

Video surveillance: Often, low quality or incomplete footage is a challenge for a proper investigation. Synthetic frame generation helps with detection and classification even in poor conditions.

Action recognition: In sports or surveillance footage, models often struggle with fast motion and partial views. Speed variation, reversal or temporal cropping helps the model learn diverse patterns of human action.
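Speed variation, reversal and temporal cropping only change which frames are used and in what order, so they can be sketched with index arithmetic. The function below assumes a clip indexed by frame along its first axis; a `speed` above 1 skips frames and below 1 repeats them. Reversal is only safe when the action label is symmetric in time: a reversed "sit down" clip looks like "stand up".

```python
import numpy as np


def temporal_augment(clip: np.ndarray, out_len: int, speed: float, reverse: bool,
                     rng: np.random.Generator) -> np.ndarray:
    """Resample a clip in time, optionally reverse it, then take a random window of out_len frames."""
    t = clip.shape[0]
    n = int(np.ceil(t / speed))
    # Frame indices for the new playback speed (truncate toward the earlier frame, stay in range)
    idx = np.minimum((np.arange(n) * speed).astype(int), t - 1)
    clip = clip[idx]
    if reverse:
        clip = clip[::-1]
    if len(clip) < out_len:  # loop short clips so the window always fits
        clip = np.concatenate([clip] * int(np.ceil(out_len / len(clip))), axis=0)
    start = int(rng.integers(0, len(clip) - out_len + 1))  # temporal crop
    return clip[start:start + out_len]


rng = np.random.default_rng(0)
frame_ids = np.arange(30)  # stand-in clip whose "frames" are just their indices
fast_reversed = temporal_augment(frame_ids, out_len=8, speed=2.0, reverse=True, rng=rng)
slow = temporal_augment(frame_ids, out_len=8, speed=0.5, reverse=False, rng=rng)
```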

Benefits of video data augmentation


Data augmentation is a cornerstone technique used by industry leaders to enhance AI performance, reduce bias, and improve model generalization.

These real-world use cases highlight how strategic data augmentation enables AI systems to generalize better and reduce bias. They also show how augmentation improves the scalability, accuracy and real-world usability of AI.

Using data augmentation isn’t a tough task, but you need to be crystal clear on your needs and follow a streamlined approach. Here are a few tips to help you get accurate results from your data augmentation tasks.

The future of data augmentation is all about aggressively tackling data limitations to improve model performance. We’re going to see more advanced machine learning techniques, like generative adversarial networks (GANs) and self-supervised learning, creating even more sophisticated and realistic synthetic data.

The rise of generative AI is a game changer. It’s helping create synthetic data and content, which opens new possibilities across all kinds of industries, with applications ranging from art and entertainment to healthcare and education.

We’ll see machine learning algorithms automate the data augmentation process itself, learning the best transformations for specific datasets and tasks. This will lead to more effective and diverse augmented data and optimize the whole training process.

Self-supervised learning (SSL) and reinforcement learning (RL) are being integrated more to enhance learning in complex environments, especially where labeled data is hard to come by.

Future research will focus on developing efficient and low power augmentation methods for edge devices and IoT applications, where you just don’t have a lot of computational resources.

So, to wrap things up: an efficient machine learning model needs good data, in both quality and quantity. That’s non-negotiable. But collecting huge volumes of data costs time and money. Data augmentation is an effective way to tackle this challenge, letting us create new training data from what we already have. You can use different techniques depending on your task to get the best results.

But you need to have a clear understanding of your requirements and the expertise to choose the right technique. If you thoughtfully apply these techniques and follow industry best practices, you can get the full potential out of your models even when data is scarce. And if you don’t have the expertise in-house, you can always get support from data augmentation experts.


Disclaimer:

HitechDigital Solutions LLP and Hitech BPO will never ask for money or commission to offer jobs or projects. In the event you are contacted by any person with job offer in our companies, please reach out to us at info@hitechbpo.com
