5 Powerful Ways Image Annotation Improves Computer Vision

Data annotation is vital for machine learning and AI, enabling these systems to interpret raw data. Its importance will grow as AI potential is further explored. Let’s understand the facets of data annotation critical to effective machine learning and deep learning algorithms.

From rudimentary notations to sophisticated data-enhancement techniques, data annotation has had a fascinating journey across attempts to structure and extract insights from data. Initiatives like the Semantic Web sought to add metadata to web content so that machines could understand and process information more effectively.

Today, annotation has gained unprecedented importance due to the immense rise of AI and Machine Learning. By 2026, almost every enterprise is expected to incorporate some form of AI into its operations, underscoring the need to devote greater effort toward building capabilities in data annotation.

As AI technologies continue to advance, the demand for high-quality annotated data has soared. The annotation market is expected to grow at a compound annual growth rate (CAGR) of 26.5% from 2023 to 2030, reaching a market value of USD 5,331.0 million by 2030.

Annotation is a broad concept, defined by several elements and factors. Without understanding these facets, it is difficult to serve the needs of your machine learning and deep learning algorithms.


There are two important dimensions that help in understanding data annotation. First, its nature, and second, its role. In terms of nature, data annotation is the process of enriching raw data, which comprises affixing labels, tags, or markers to unstructured data. In this way, it provides the ground truth needed to train AI and machine learning models.
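As a minimal sketch of what "affixing labels to raw data" can look like in practice, the snippet below pairs raw items with annotator-assigned labels and turns them into training pairs. The `AnnotatedSample` class, the `to_training_pairs` helper, and the sample values are hypothetical names chosen for illustration, not part of any specific annotation tool.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class AnnotatedSample:
    """A raw data item plus the labels an annotator attached to it (hypothetical schema)."""
    raw: str                                          # unstructured input, e.g. a sentence or file name
    labels: List[str] = field(default_factory=list)   # tags assigned by annotators


def to_training_pairs(samples: List[AnnotatedSample]) -> List[Tuple[str, str]]:
    """Turn annotated samples into (input, label) pairs; unlabeled items yield nothing."""
    return [(s.raw, label) for s in samples for label in s.labels]


samples = [
    AnnotatedSample(raw="The delivery arrived two days late.", labels=["complaint"]),
    AnnotatedSample(raw="photo_0412.jpg", labels=["cat", "indoor"]),
    AnnotatedSample(raw="unlabeled_clip.wav"),  # raw data that has not been annotated yet
]

pairs = to_training_pairs(samples)
for raw, label in pairs:
    print(f"{raw!r} -> {label}")
```

Only the labeled items produce training pairs; the unlabeled clip contributes no ground truth until someone annotates it.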

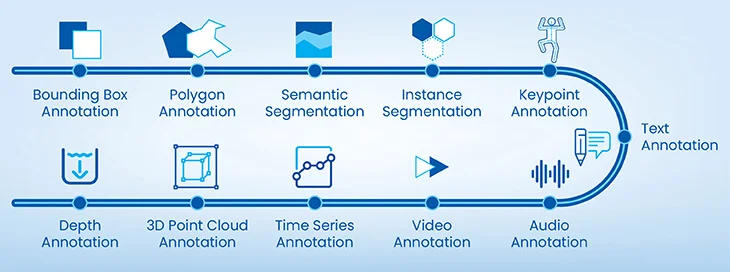

Depending on the nature of the data and the objectives of the AI or machine learning project, data annotation can take various forms. So, it’s a broad term that encompasses annotation forms such as image annotation, video annotation, audio annotation, and text annotation.

Data annotation is pivotal in elevating the quality and utility of datasets. Annotated data becomes the bedrock on which algorithms train and become more adept at discerning intricate patterns and making precise predictions. Careful annotation also helps mitigate biases and inaccuracies that may be present in the original raw data. In this sense, data annotation is the bridge between raw data and the insights essential for sound decision making.

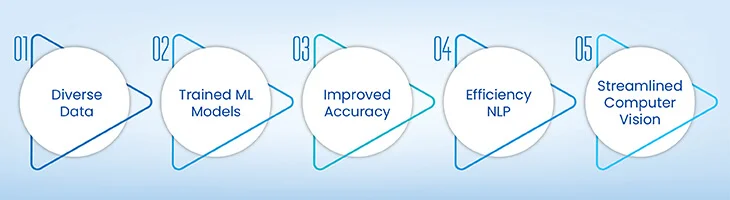

Today, when AI and machine learning are on the agenda of most CEOs and CTOs, data annotation has become a key activity in the entire data processing pipeline that feeds into algorithms. Data annotation drives AI and ML implementation by:

Annotations extend to various types of data, including text, audio, video, and even more complex forms, such as 3D images or medical records. For instance, in medical imaging, annotations are used to identify and classify structures within images, such as tumors, organs, or anomalies.

Supervised machine learning models cannot be trained without data annotation, as they learn from labeled data to perform their function (prediction, classification, etc.). In recommendation systems, for example, user preferences (annotated as likes, dislikes, or ratings) train the model to suggest relevant items. In one such case, a high-performance image training dataset helped a Swiss food waste management company build an accurate machine learning model.

Well-annotated data is essential for building precise models, as it reduces errors and thus improves the model’s ability to produce accurate results. In autonomous vehicles, for example, accurate annotation of objects in the environment helps ensure that the vehicle makes safe decisions.

In natural language processing (NLP), text annotation is critical for tasks like sentiment analysis, named entity recognition, and part-of-speech tagging. In sentiment analysis, for instance, text samples are annotated with labels indicating positive, negative, or neutral sentiment, which give models the input they need to understand and categorize the sentiments expressed in text.
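To make the two annotation styles concrete, here is a small sketch of sentiment labels and named-entity spans. The record layout (`text`, `label`, and `entities` with character offsets), the entity tags, and the helper names are assumptions for illustration; real annotation tools each define their own export formats.

```python
from collections import Counter

# Assumed label set, taken from the three sentiments named in the text.
ALLOWED_SENTIMENTS = {"positive", "negative", "neutral"}

sentiment_data = [
    {"text": "The battery life is fantastic.", "label": "positive"},
    {"text": "The screen cracked within a week.", "label": "negative"},
    {"text": "The phone ships with a charger.", "label": "neutral"},
]

# Named entities marked as character spans: text[start:end] is the entity.
ner_example = {
    "text": "Waymo tests vehicles in Phoenix.",
    "entities": [
        {"start": 0, "end": 5, "label": "ORG"},
        {"start": 24, "end": 31, "label": "LOC"},
    ],
}


def validate_sentiment(records):
    """Reject labels outside the allowed set and return the label distribution."""
    bad = [r for r in records if r["label"] not in ALLOWED_SENTIMENTS]
    if bad:
        raise ValueError(f"unexpected labels: {[r['label'] for r in bad]}")
    return Counter(r["label"] for r in records)


def entity_texts(example):
    """Resolve each annotated span back to the text it covers."""
    text = example["text"]
    return [(text[e["start"]:e["end"]], e["label"]) for e in example["entities"]]


print(validate_sentiment(sentiment_data))
print(entity_texts(ner_example))
```

Resolving spans back to their text is a cheap sanity check: an off-by-one offset shows up immediately as a clipped or padded entity string.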

Object detection, image segmentation, and facial recognition rely heavily on annotated data. Video and image annotations provide the training datasets for these computer vision (CV) algorithms. For instance, in facial recognition, annotated images provide key points (eyes, nose, mouth, etc.) that help the model identify and differentiate individuals.
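A key-point annotation of the kind described above might be stored as a bounding box plus named landmark coordinates. The sketch below assumes a hypothetical `FaceAnnotation` schema with pixel coordinates and a box given as (x_min, y_min, width, height); it is not the format of any particular tool.

```python
from dataclasses import dataclass
from typing import Dict, Tuple


@dataclass
class FaceAnnotation:
    """One annotated face: a bounding box and named landmarks (hypothetical schema)."""
    image_id: str
    box: Tuple[int, int, int, int]            # (x_min, y_min, width, height) in pixels
    keypoints: Dict[str, Tuple[int, int]]     # landmark name -> (x, y) in pixels


def keypoints_inside_box(ann: FaceAnnotation) -> bool:
    """Basic quality check: every landmark should fall within the face box."""
    x, y, w, h = ann.box
    return all(x <= kx <= x + w and y <= ky <= y + h
               for kx, ky in ann.keypoints.values())


def normalized_keypoints(ann: FaceAnnotation) -> Dict[str, Tuple[float, float]]:
    """Express each landmark relative to the box, so values lie in the range 0..1."""
    x, y, w, h = ann.box
    return {name: ((kx - x) / w, (ky - y) / h)
            for name, (kx, ky) in ann.keypoints.items()}


ann = FaceAnnotation(
    image_id="face_001.jpg",
    box=(100, 50, 200, 200),
    keypoints={
        "left_eye": (150, 120),
        "right_eye": (250, 120),
        "nose": (200, 160),
        "mouth": (200, 210),
    },
)

print(keypoints_inside_box(ann))
print(normalized_keypoints(ann))
```

Normalizing landmarks to the box makes annotations comparable across images of different sizes, which is one common reason to store both the box and the key points.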

The range of applications for data annotation keeps expanding as data takes on new forms. Some of the industries where data annotation has demonstrated its significance are:

Medical and Healthcare: Data annotation aids in diagnosing diseases and optimizing healthcare delivery through the labeling of medical images and electronic health records. NVIDIA Clara is one example of an AI-powered healthcare platform that relies on annotated data.

Autonomous Vehicles and Transportation: Annotation is crucial for enabling the safe navigation of autonomous vehicles by identifying objects, lane markings, and road conditions. Waymo and Tesla use annotated data to train their autonomous driving systems.

eCommerce and Retail: Annotations categorize and attribute products, enhancing search functionality and recommendation systems for online shopping. Amazon, eBay, and Alibaba Group, directly or indirectly, leverage data annotation to perform these functions.

Finance and Banking: Annotations of financial transactions assist in identifying suspicious activities and potential fraud, while sentiment analysis helps in market research. Specializing in fraud detection, Feedzai labels financial transactions to train its predictive models.

Gaming and Entertainment: Annotations support character and object recognition in video games, virtual reality environments, and interactive gaming experiences. Data annotation is proving important for companies like Sony and Ubisoft in character animation and in delivering interactive experiences in VR-based games.

Agriculture: Data annotation aids in crop monitoring and weed detection, helping to assess crop health and predict yield for efficient farming practices. The process has become a part of AI-driven crop monitoring and yield prediction for stalwarts in the field, such as Trimble Agriculture, John Deere and AgShift.


Annotation comes with many challenges and is not an easy exercise. When annotators do not address a challenge properly, it can derail an entire AI and machine learning project. Some challenges typically encountered in the data annotation process are:

| Data Annotation Type | What It Does |
|---|---|
| Text Annotation | Adding labels or tags to textual data for the purpose of training machine learning models. Important for categorizing or tagging segments of text to execute tasks like sentiment analysis and named entity recognition. |
| Image Annotation | Marking and labeling objects or regions in images to train machine learning algorithms in recognizing and understanding visual elements. Used in tasks such as object detection, image segmentation and image classification. |
| Audio Annotation | Labeling or transcribing audio data to train models in tasks such as speech recognition, sentiment analysis, or any application where understanding spoken language is important. |
| Video Annotation | Annotating frames or segments in a video to identify and track objects or actions, enabling machine learning applications like action recognition, object tracking, and more. |
| Audio-to-Text Annotation | Converting spoken language into written text to train models for tasks such as transcription services, voice assistants, and any application that requires the conversion of speech to text. |

The efficiency of annotation implementation rests on the technique(s) implemented for a given annotation project. From among the techniques mentioned below, annotators select those that best suit their project.


A range of tools is available for performing annotation, and annotators choose those that best fit a given project. Some of the widely used tools are:

Following established guidelines helps keep annotations consistent and accurate across the dataset. By adopting the following best practices in your data annotation process, you will preserve the quality of your data and get the best outcomes from your AI and ML models.
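One common way to measure whether annotations are consistent is to have two annotators label the same items and compute an agreement score. The sketch below uses Cohen's kappa, a standard agreement statistic that is an assumption here rather than something the article prescribes; the function name and sample labels are illustrative.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Agreement between two annotators, corrected for agreement expected by chance."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("both annotators must label the same non-empty set of items")
    n = len(labels_a)
    # Fraction of items on which the two annotators chose the same label.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Chance agreement, from each annotator's own label frequencies.
    count_a, count_b = Counter(labels_a), Counter(labels_b)
    expected = sum(count_a[label] * count_b[label] for label in count_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used a single identical label throughout
    return (observed - expected) / (1 - expected)


annotator_1 = ["cat", "dog", "cat", "cat", "dog", "dog"]
annotator_2 = ["cat", "dog", "dog", "cat", "dog", "cat"]

kappa = cohens_kappa(annotator_1, annotator_2)
print(f"kappa = {kappa:.3f}")
```

A low score on a pilot batch usually points to ambiguous guidelines rather than careless annotators, so it is a useful signal for revising the guidelines before labeling at scale.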

We accelerated data annotation timelines and significantly improved the AI model performance of a German construction technology company. Our client is from the real estate sector and sought to capture, validate, and verify information from multi-lingual online publications. Our data annotation teams completed the project in record time, within budget, while enhancing outcomes.

The data annotation pipeline, apart from preparing the data to suit the algorithmic needs, also extends to algorithmic execution, as it determines model accuracy. We examine each step that forms a part of this workflow.

Onboard a tool after evaluating it against the following points. If it meets each criterion, then you can successfully start implementing.

Human annotators are essential in refining data for machine learning models. Their expertise helps ensure precise and relevant annotations. Proficient annotators contribute domain insight, nuanced judgment, and contextual understanding, which are important for the model’s proficiency. Therefore, despite the growing use of automation in annotation, human annotators must remain in the loop.

Humans possess the ability to comprehend subtleties, cultural context, and ambiguous language, which are crucial for tasks like sentiment analysis or complex categorization. Moreover, human annotators can adapt swiftly to novel data, while automated systems struggle with unforeseen variations. Ethical considerations, such as sensitive content, also necessitate human judgment.
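A simple way to keep humans in the loop alongside automation is to accept machine-generated labels only when the model is confident and send the rest to a review queue. The sketch below assumes each pre-label carries a `confidence` score between 0 and 1; the field names, the `route_predictions` helper, and the 0.9 threshold are hypothetical choices for illustration.

```python
def route_predictions(predictions, threshold=0.9):
    """Split pre-labeled items into auto-accepted ones and ones needing human review."""
    auto_accepted, needs_review = [], []
    for item in predictions:
        # Low-confidence items are the ones most likely to involve ambiguity or novelty.
        target = auto_accepted if item["confidence"] >= threshold else needs_review
        target.append(item)
    return auto_accepted, needs_review


predictions = [
    {"id": 1, "label": "positive", "confidence": 0.97},
    {"id": 2, "label": "negative", "confidence": 0.55},  # e.g. sarcasm the model is unsure about
    {"id": 3, "label": "neutral", "confidence": 0.91},
    {"id": 4, "label": "positive", "confidence": 0.62},
]

accepted, review_queue = route_predictions(predictions)
print("auto-accepted ids:", [p["id"] for p in accepted])
print("human review ids:", [p["id"] for p in review_queue])
```

The threshold is a trade-off: raising it sends more items to human annotators and costs more time, while lowering it lets more uncertain machine labels into the training set.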

Don’t forget that the quality of the training dataset you use for your ML models determines their performance. Using effective data labeling methods gives you high-quality training data. It ultimately helps your AI/ML models make precise decisions that empower you to grow profitably.

The arrival of new concepts is shaping data annotation, signifying a shift towards more sophisticated applications. Some of the trends bringing about change are:

To sum up, data annotation is a critical component in the field of machine learning, enabling AI systems to understand and interpret raw data. It involves the process of labeling or tagging data, which can be in various forms, such as text, images, or audio, to make it meaningful for AI models.

Various tools and strategies can be employed for data annotation, each with specific use cases and potential future directions. Their complexity underlines the need for expertise in this area, whether through professional services or a thorough understanding of industry best practices. As we continue to explore the potential of AI, the role and significance of data annotation will continue to grow and take center stage in strategies for ML model development.
