
Entity annotation, crucial for training effective ML models, faces challenges such as annotator subjectivity and complex text. BERT, with its contextual understanding and adaptability, improves annotation quality and efficiency, revolutionizing NLP applications.

In the machine learning landscape, entity annotation, the process of identifying and labeling key elements within text data, is critical for training high-performing models. It underpins tasks such as sentiment analysis, information extraction, and question answering, which makes it an essential step in putting unstructured text to use. Doing it well, however, is harder than it looks.


Bidirectional Encoder Representations from Transformers (BERT), a pre-trained language model released by Google in 2018, offers a way to handle these text annotation challenges. Since its release, it has significantly advanced the field of Natural Language Processing (NLP). BERT’s ability to grasp the context of each word within a sentence makes it a valuable tool for improving both the accuracy and efficiency of entity annotation for ML models.

In this article, we look at how BERT is transforming entity annotation, leading to more precise and effective machine learning models across various domains.

Entity annotation is all about identifying and labeling significant elements within text data. These elements, known as entities, can be people, locations, organizations, dates, monetary values, etc. Entity annotation is crucial for training powerful machine learning models. It empowers models and algorithms to perform tasks like sentiment analysis, information extraction, and question answering by providing a structured understanding of the underlying text data.

Definition and Role

Entity annotation is the process of meticulously labeling specific elements within text, such as names, places, dates or numerical values. This structured labeling empowers machine learning models to recognize and extract crucial information from unstructured text data, enabling tasks like sentiment analysis, machine translation, and information retrieval.
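To make this concrete, annotations are often stored as character-offset spans and then converted into per-token tags for model training. The sketch below uses the widely used BIO scheme (B- marks the first token of an entity, I- a continuation, O everything else). The whitespace tokenizer, the `spans_to_bio` helper, and the sample sentence are illustrative assumptions, not part of any particular annotation tool.

```python
from typing import List, Tuple

# A character-offset annotation: (start, end, label), with `end` exclusive.
Span = Tuple[int, int, str]


def spans_to_bio(text: str, spans: List[Span]) -> List[Tuple[str, str]]:
    """Tag whitespace-separated tokens with B-/I-/O labels from character spans."""
    tagged = []
    pos = 0
    for word in text.split():
        start = text.index(word, pos)  # character offset of this token
        end = start + len(word)
        pos = end
        tag = "O"  # tokens outside every span are "outside" (O)
        for span_start, span_end, label in spans:
            if start >= span_start and end <= span_end:
                # The first token of an entity opens it (B-); later tokens continue it (I-)
                tag = ("B-" if start == span_start else "I-") + label
                break
        tagged.append((word, tag))
    return tagged


text = "Tim Cook visited Paris in May 2023"
spans = [(0, 8, "PER"), (17, 22, "LOC"), (26, 34, "DATE")]
for word, tag in spans_to_bio(text, spans):
    print(f"{word}\t{tag}")
```

Keeping annotations as character spans and deriving token tags afterwards lets the same labeled data be re-tokenized for different models.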

Types of Entities

Entities encompass everything from named entities (people, organizations, locations) to numerical entities (dates, times, quantities), and even more specialized categories like medical or legal entities.

Challenges in Traditional Methods

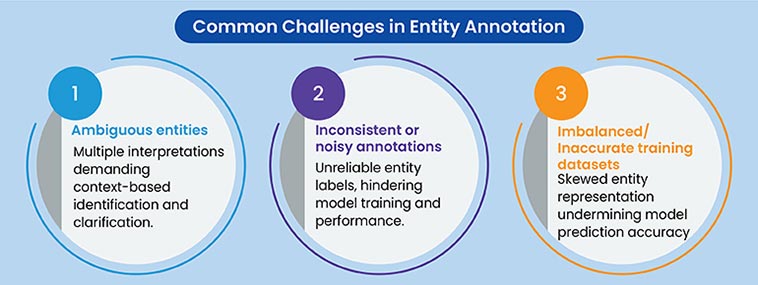

Traditional entity annotation methods rely heavily on human annotators and face challenges such as subjective labeling decisions that vary from one annotator to another and difficulty handling complex text.

These challenges underscore the urgent need for innovative approaches to entity annotation, capable of addressing the limitations of traditional methods and empowering the development of more accurate and robust machine learning models.

BERT, a revolutionary pre-trained language model, has significantly enhanced the ability of machines to comprehend the nuances of human language. Its innovative architecture and training methodologies allow it to capture contextual information, handle long-range dependencies, and leverage transfer learning, enabling it to understand natural language effectively and efficiently.

BERT’s architecture and functioning

BERT’s architecture is built on the encoder stack of the Transformer, a neural network architecture that uses self-attention to relate every word in a sequence to every other word and processes the whole sequence in parallel. The model is bidirectional: when it builds a representation of a word, it considers both the preceding and the following words in the sentence. This lets BERT capture the context of words comprehensively, enabling a deeper understanding of language.
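The bidirectional behavior comes from the attention pattern. The plain-Python sketch below computes scaled dot-product self-attention, softmax(Q K^T / sqrt(d_k)) V, and contrasts BERT-style unmasked attention with a left-to-right (causal) mask. It is a deliberate simplification: a single head, toy 2-dimensional vectors, and the input vectors reused as queries, keys, and values instead of BERT’s learned projections.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(m: Matrix) -> Matrix:
    return [list(col) for col in zip(*m)]


def softmax(row: List[float]) -> List[float]:
    peak = max(row)  # subtract the max for numerical stability
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x: Matrix, causal: bool = False) -> Tuple[Matrix, Matrix]:
    """Single-head scaled dot-product attention with Q = K = V = x (simplified)."""
    d_k = len(x[0])
    scores = matmul(x, transpose(x))  # scores[i][j]: affinity of token i for token j
    weights = []
    for i, row in enumerate(scores):
        scaled = [s / math.sqrt(d_k) for s in row]
        if causal:
            # A left-to-right model hides every position after i
            scaled = [s if j <= i else float("-inf") for j, s in enumerate(scaled)]
        weights.append(softmax(scaled))
    return matmul(weights, x), weights


x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # three toy token vectors
_, bidirectional = self_attention(x)
_, causal = self_attention(x, causal=True)
print(bidirectional[0])  # token 0 attends to all three tokens
print(causal[0])         # token 0 can only attend to itself
```

With the causal mask, the first token puts zero weight on later tokens; without it, every token spreads attention across the whole sentence, which is what lets BERT use right-hand context when interpreting a word.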

How BERT captures contextual information

BERT’s bidirectional nature enables it to grasp the contextual meaning of words. It analyzes the relationships between words in both directions, using the surrounding words to determine the precise meaning of a particular word. This contextual understanding helps BERT excel at various natural language processing tasks, including entity annotation, where the same word can refer to different kinds of entities depending on its context. For example, “Jordan” may be a person in one sentence and a country in another.
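BERT learns to use context from both sides during pre-training through its masked language modeling objective: about 15% of input tokens are selected as prediction targets, and of those, 80% are replaced with `[MASK]`, 10% with a random token, and 10% are left unchanged. The model must then recover each original token from the words on both sides. The `mask_tokens` function below is a hypothetical sketch of that corruption step; the independent per-token random draw is a simplification of BERT’s original data pipeline.

```python
import random
from typing import List, Optional, Sequence, Tuple


def mask_tokens(
    tokens: Sequence[str],
    vocab: Sequence[str],
    mask_prob: float = 0.15,
    seed: Optional[int] = None,
) -> Tuple[List[str], List[Optional[str]]]:
    """Corrupt tokens for masked language modeling; labels hold the targets to predict."""
    rng = random.Random(seed)
    inputs = list(tokens)
    labels: List[Optional[str]] = [None] * len(tokens)  # None = not a prediction target
    for i, token in enumerate(tokens):
        if rng.random() >= mask_prob:
            continue  # token is left as-is and not predicted
        labels[i] = token  # the model must recover this token from both sides
        roll = rng.random()
        if roll < 0.8:
            inputs[i] = "[MASK]"  # 80%: replace with the mask token
        elif roll < 0.9:
            inputs[i] = rng.choice(vocab)  # 10%: replace with a random token
        # remaining 10%: keep the original token unchanged
    return inputs, labels


sentence = "the bank of the river was flooded".split()
inputs, labels = mask_tokens(sentence, vocab=sentence, seed=3)
print(inputs)
print(labels)
```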

BERT’s ability to handle long-range dependencies

The Transformer architecture at the heart of BERT allows it to effectively handle long-range dependencies within text. It can establish connections between words that are far apart in a sentence, capturing complex relationships and nuances. This capability is crucial for accurately identifying and classifying entities, as the context of an entity may span multiple words or phrases within a sentence.
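Attention can link any two positions inside the model’s input, but that input is finite: the original BERT models accept at most 512 tokens, including the `[CLS]` and `[SEP]` special tokens. A common way to annotate longer documents is to split them into overlapping windows so that entities near a boundary still see context on both sides. The `sliding_windows` helper and its `max_len` and `overlap` defaults below are illustrative assumptions, not values prescribed by BERT.

```python
from typing import List, Tuple


def sliding_windows(n_tokens: int, max_len: int = 510, overlap: int = 128) -> List[Tuple[int, int]]:
    """Split token positions [0, n_tokens) into overlapping (start, end) windows, end exclusive.

    max_len defaults to 510 so that [CLS] and [SEP] still fit in a 512-token input.
    """
    if overlap >= max_len:
        raise ValueError("overlap must be smaller than max_len")
    windows = []
    start = 0
    while True:
        end = min(start + max_len, n_tokens)
        windows.append((start, end))
        if end == n_tokens:
            break
        start = end - overlap  # step back so adjacent windows share context
    return windows


print(sliding_windows(1000))  # [(0, 510), (382, 892), (764, 1000)]
```

For entities that fall in an overlapping region, one option is to keep the prediction from the window in which the entity sits farther from the edge, since it has more context there.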

What is transfer learning in BERT

BERT utilizes transfer learning, a technique that enables the model to leverage the knowledge acquired from pre-training on massive amounts of text data. This pre-trained knowledge serves as a foundation for fine-tuning BERT to specific tasks, such as entity annotation. By transferring knowledge from pre-training, BERT achieves impressive performance, even with limited task-specific training data.
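When BERT is fine-tuned for entity annotation as a token classification task, word-level labels have to be aligned with BERT’s WordPiece subword tokens. One common convention gives the label to the first subword of each word and marks the remaining pieces with an ignore value (-100 is the default `ignore_index` of PyTorch’s `CrossEntropyLoss`), so they do not contribute to the loss. In the sketch below, the toy vocabulary and the `wordpiece` and `align_labels` helpers are hypothetical stand-ins for a real BERT tokenizer and data pipeline.

```python
from typing import Dict, List, Sequence, Set, Tuple

IGNORE_INDEX = -100  # PyTorch's CrossEntropyLoss skips targets equal to this by default


def wordpiece(word: str, vocab: Set[str]) -> List[str]:
    """Greedy longest-match-first subword split; non-initial pieces carry a '##' prefix."""
    pieces: List[str] = []
    start = 0
    while start < len(word):
        end = len(word)
        piece = None
        while end > start:
            candidate = word[start:end] if start == 0 else "##" + word[start:end]
            if candidate in vocab:
                piece = candidate
                break
            end -= 1  # try a shorter substring
        if piece is None:
            return ["[UNK]"]  # no valid split: the whole word becomes unknown
        pieces.append(piece)
        start = end
    return pieces


def align_labels(
    words: Sequence[str],
    word_labels: Sequence[str],
    vocab: Set[str],
    label2id: Dict[str, int],
) -> Tuple[List[str], List[int]]:
    """Expand word-level labels to subword tokens, labeling only each word's first piece."""
    tokens: List[str] = []
    label_ids: List[int] = []
    for word, label in zip(words, word_labels):
        pieces = wordpiece(word, vocab)
        tokens.extend(pieces)
        label_ids.append(label2id[label])
        label_ids.extend([IGNORE_INDEX] * (len(pieces) - 1))
    return tokens, label_ids


vocab = {"micro", "##soft", "hired", "alice"}  # hypothetical toy vocabulary
label2id = {"O": 0, "B-ORG": 1, "I-ORG": 2, "B-PER": 3, "I-PER": 4}
tokens, label_ids = align_labels(["microsoft", "hired", "alice"], ["B-ORG", "O", "B-PER"], vocab, label2id)
print(tokens)     # ['micro', '##soft', 'hired', 'alice']
print(label_ids)  # [1, -100, 0, 3]
```

In a real pipeline, the `[CLS]` and `[SEP]` tokens added around each sequence are usually given the ignore value as well.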

BERT’s innovative architecture, coupled with its ability to capture context, handle long-range dependencies, and leverage transfer learning, has positioned it as a breakthrough in natural language understanding. Its prowess in comprehending the intricacies of language holds immense potential for enhancing the accuracy and efficiency of entity annotation for ML models.

BERT’s advanced language understanding capabilities have significantly elevated the quality of entity annotation for ML models. Through its contextual understanding and adaptability, BERT delivers gains in both accuracy and efficiency and has transformed the way entities are identified and labeled.

Enhanced Contextual Understanding

Improved Accuracy

Increased Efficiency

Adaptability to Different Domains and Languages

BERT’s profound impact on entity annotation quality has revolutionized the way ML models are trained. Its contributions to enhanced accuracy, efficiency, and adaptability empower annotators and researchers to create more reliable and effective machine learning systems across various domains.

BERT’s capabilities extend beyond theoretical advancements, finding practical applications and revolutionizing how ML models are trained and deployed across diverse domains.

Named Entity Recognition (NER)

Relation Extraction

Event Extraction

Other Entity Annotation Tasks

BERT’s practical applications in entity annotation span a wide array of tasks. Its contributions enhance accuracy, efficiency, and adaptability, making it an invaluable tool for empowering ML models with deeper language understanding and improved performance across various domains.

Harnessing the full potential of BERT for entity annotation requires adherence to best practices. These practices encompass data preparation, fine-tuning, evaluation and integration to ensure optimal performance and efficiency.

Data Preparation and Preprocessing

Fine-tuning BERT for Specific Tasks

Model Evaluation and Optimization
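Entity annotation models are commonly scored at the entity level: a predicted entity counts as correct only if both its type and its exact token boundaries match the reference, in the style of the CoNLL evaluation script. The functions below are a minimal plain-Python sketch of that scoring over BIO tags; the function names are illustrative, and libraries such as seqeval provide fuller implementations.

```python
from typing import List, Set, Tuple


def extract_entities(tags: List[str]) -> Set[Tuple[str, int, int]]:
    """Collect (type, start, end) spans from BIO tags; end is exclusive."""
    entities = set()
    etype, start = None, None
    for i, tag in enumerate(tags + ["O"]):  # sentinel "O" closes a trailing entity
        if tag.startswith("I-") and etype == tag[2:]:
            continue  # the current entity keeps growing
        if etype is not None:
            entities.add((etype, start, i))
            etype, start = None, None
        if tag != "O":
            # B- opens an entity; a stray I- is treated as the start of a new one
            etype, start = tag[2:], i
    return entities


def entity_prf(gold: List[str], pred: List[str]) -> Tuple[float, float, float]:
    """Entity-level precision, recall, and F1 with exact span matching."""
    gold_ents = extract_entities(gold)
    pred_ents = extract_entities(pred)
    tp = len(gold_ents & pred_ents)
    precision = tp / len(pred_ents) if pred_ents else 0.0
    recall = tp / len(gold_ents) if gold_ents else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


gold = ["B-PER", "I-PER", "O", "B-LOC"]
pred = ["B-PER", "O", "O", "B-LOC"]  # the person entity is cut short
print(entity_prf(gold, pred))  # (0.5, 0.5, 0.5)
```

Because the truncated person span counts as an error, entity-level scores are stricter than token-level accuracy, which would rate this prediction 3 of 4 tokens correct.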

Integration with Annotation Tools
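One common integration pattern is pre-annotation: the fine-tuned model proposes entity labels, and only sentences containing low-confidence predictions are routed to human annotators for review. The sketch below assumes the model returns one label and confidence score per token; the `Prediction` type, the `route_sentences` helper, the 0.9 threshold, and the sample scores are all hypothetical choices for illustration, not settings from any specific annotation tool.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Prediction:
    token: str
    label: str
    confidence: float  # e.g. the model's softmax probability for `label`


Sentence = List[Prediction]


def route_sentences(sentences: List[Sentence], threshold: float = 0.9) -> Tuple[List[Sentence], List[Sentence]]:
    """Split pre-annotated sentences into auto-accepted ones and a human review queue."""
    accepted: List[Sentence] = []
    review: List[Sentence] = []
    for sentence in sentences:
        # A single uncertain token is enough to send the whole sentence to a person
        lowest = min((p.confidence for p in sentence), default=1.0)
        (accepted if lowest >= threshold else review).append(sentence)
    return accepted, review


batch = [
    [Prediction("Paris", "B-LOC", 0.98), Prediction("is", "O", 0.99)],
    [Prediction("Jordan", "B-PER", 0.61), Prediction("won", "O", 0.97)],
]
accepted, review = route_sentences(batch)
print(len(accepted), len(review))  # 1 1
```

Raising the threshold sends more sentences to human reviewers; lowering it saves annotation time at the risk of accepting more model errors.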

By adhering to best practices for data preparation, fine-tuning, evaluation, and integration, BERT’s potential for enhancing entity annotation can be fully realized. The combination of human expertise and BERT’s advanced language understanding capabilities empowers ML models to achieve exceptional accuracy and efficiency in entity recognition tasks.

The evolution of BERT and its integration with other technologies are all set to shape the future of entity annotation, driving innovation and impacting the annotation industry in significant ways.

Advancements in BERT and similar models

Combining BERT with other techniques

Impact on the annotation industry

The future of entity annotation is intertwined with the continued evolution of BERT and the broader field of natural language understanding. By staying abreast of these trends, AI and ML companies can harness the full potential of entity annotation, empowering their models to achieve unprecedented accuracy and efficiency in understanding and extracting valuable insights from text data.

There is little doubt that BERT has emerged as a transformative force in entity annotation, significantly enhancing the quality and efficiency of the process. Its contextual understanding, accuracy improvements, increased efficiency, and adaptability empower AI and ML companies to unlock the full potential of their models. By adopting BERT, companies can significantly improve the performance of their natural language processing applications, leading to more accurate and insightful results.

The future holds even more exciting possibilities, with advancements in BERT and related models, as well as integration with other techniques, promising to further revolutionize entity annotation and its impact on the AI and ML landscape. As research and development in this field continue to progress, we anticipate even more sophisticated and efficient methods for entity annotation, paving the way for a new era of language understanding and intelligent applications.


Disclaimer:

HitechDigital Solutions LLP and Hitech BPO will never ask for money or commission to offer jobs or projects. If you are contacted by anyone with a job offer at our companies, please reach out to us at info@hitechbpo.com
