10 Powerful eCommerce Data Collection Strategies & Examples

Accurate data annotation is critical for developing efficient ML models. Key strategies include refining annotation schemas, prioritizing quality control, addressing bias, optimizing workflows, and leveraging automation. Together, these make annotation more accurate, more efficient, and more ethically sound, which in turn improves model performance.


High-quality, precisely labelled data serves as the foundation for successful machine learning models. Data annotation for ML projects provides the ground truth for these models to learn from. The clearer the labels, the better the algorithm can learn and perform its intended tasks.

To put the importance of data annotation in perspective, consider that the global data annotation tools market is projected to reach $3.4 billion by 2028. That figure does not include the hundreds of data annotation teams, training operations, and development projects, or the capital invested in data annotation for AI and ML.

However, in annotation projects, the challenge lies in ensuring label quality. This is handled through effective data labeling strategies such as refining annotation schemas, implementing quality control, addressing bias, leveraging automation for scaling the process and optimizing data annotation workflow management.

These tactics and techniques enhance the accuracy and efficiency of your data annotation process, leading to AI applications that can withstand the stress of performing in live environments.

Data annotation involves labeling raw data to provide context and meaning, enabling algorithms to learn and make accurate predictions. A well-defined workflow is crucial for efficient and accurate annotation.

The following are considered key steps in any machine learning data annotation project:

Strategic data labeling and annotation ensures high-quality training data, high model accuracy, better generalization, and overall performance improvement in machine learning projects.

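The following are five important strategies used in data annotation projects: refining annotation schemas and ontologies, implementing quality control and validation, addressing bias and fairness, scaling and streamlining annotation workflows, and leveraging automated data labeling.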

To create high-quality labeled datasets, we need to refine our annotation schemas and ontologies. These provide the structured framework necessary for accurate and meaningful annotations.

Contextual awareness involves capturing relationships between entities, temporal information, and nuanced attributes, such as sentiment and intent. This enriches the data and allows our models to make more informed decisions. For example, annotating dialogs with speaker turns and emotional tone provides a valuable context for natural language processing models.

Ontology enrichment is an important part of quality data annotation in machine learning. It involves building detailed ontologies with hierarchical structures and semantic relationships. For this, we leverage knowledge graphs and semantic web technologies to create a detailed representation of domain knowledge.

Granular label hierarchies capture fine-grained distinctions in data. This enables our models to learn at different levels of abstraction, providing flexibility in data interpretation and usage.

For this, we begin by establishing broad categories and then systematically breaking them down into more specific subcategories. It’s crucial to ensure each level of our hierarchy adheres to the principles of mutual exclusivity and collective exhaustiveness.

The ideal level of granularity is inherently connected to the particular machine learning task we are addressing, so we must customize our hierarchy to fit this need. The hierarchy has to be structured to easily incorporate new concepts and adapt to evolving needs as they emerge.
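As a minimal sketch of the idea above, a label hierarchy can be held as a small tree and checked for mutual exclusivity and collective exhaustiveness. The class, the helper functions, and the example labels are illustrative assumptions, not part of any particular annotation tool.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class LabelNode:
    """One node in a label hierarchy (hypothetical structure for illustration)."""
    name: str
    children: list["LabelNode"] = field(default_factory=list)

    def leaves(self) -> list[str]:
        # A node without children is itself a leaf label.
        if not self.children:
            return [self.name]
        return [leaf for child in self.children for leaf in child.leaves()]

    def path_to(self, label: str) -> Optional[list[str]]:
        # Chain of labels from this node down to `label`, or None if absent.
        if self.name == label:
            return [self.name]
        for child in self.children:
            sub = child.path_to(label)
            if sub is not None:
                return [self.name] + sub
        return None


def duplicate_leaves(root: LabelNode) -> set[str]:
    # A leaf that appears under two branches breaks mutual exclusivity.
    seen, dupes = set(), set()
    for leaf in root.leaves():
        (dupes if leaf in seen else seen).add(leaf)
    return dupes


def uncovered(root: LabelNode, observed: set[str]) -> set[str]:
    # Labels seen in the data with no matching leaf break exhaustiveness.
    return observed - set(root.leaves())


# Broad category first, then more specific subcategories.
root = LabelNode("vehicle", [
    LabelNode("car", [LabelNode("sedan"), LabelNode("suv")]),
    LabelNode("truck"),
])
print(root.path_to("suv"))                 # coarse-to-fine path for one label
print(uncovered(root, {"sedan", "bus"}))   # labels the hierarchy cannot place
```

Storing the full path lets a model be trained at whichever level of abstraction the task needs, and new subcategories can be added as children without changing existing labels.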

Hitech BPO delivered accurate image annotations for a Swiss waste assessment company, facilitating precise machine learning training. By segmenting food waste images and incorporating an audit mechanism, they improved data accuracy, helping the client significantly reduce food waste across various food outlets.

Read full case study →Annotate large text datasets for AI/ML with expert precision.

Robust quality control and validation are essential for ensuring the accuracy and reliability of annotated data.

Advanced Metrics and Analysis

Utilize metrics like Inter-Annotator Agreement (IAA) to measure consistency among annotators and Cronbach’s Alpha to assess the reliability of final annotations. IAA ensures uniform understanding and application of guidelines, especially when working with data that requires subjective judgment.

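Cronbach’s Alpha is a reliability coefficient that measures internal consistency, that is, how closely the ratings from different annotators track one another. It typically falls between 0 and 1 (it can drop below 0 when ratings are negatively correlated), with higher values indicating more consistent final labels.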
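To make these two metrics concrete, here is a minimal sketch using only the Python standard library. It uses Cohen's kappa as one common form of IAA for two annotators (the text does not say which IAA statistic is intended), and it treats each annotator as an "item" when computing Cronbach's Alpha on numeric scores. Function names and sample data are illustrative.

```python
from collections import Counter
from statistics import pvariance


def cohens_kappa(a: list[str], b: list[str]) -> float:
    """Agreement between two annotators, corrected for chance agreement."""
    n = len(a)
    observed = sum(x == y for x, y in zip(a, b)) / n
    count_a, count_b = Counter(a), Counter(b)
    # Chance agreement: probability both pick the same label independently.
    expected = sum(count_a[label] * count_b[label] for label in count_a) / (n * n)
    # Undefined (division by zero) if both annotators always use one label.
    return (observed - expected) / (1 - expected)


def cronbach_alpha(ratings: list[list[float]]) -> float:
    """ratings[i][j] is the numeric score annotator j gave to item i."""
    k = len(ratings[0])
    per_annotator_var = sum(pvariance([row[j] for row in ratings]) for j in range(k))
    total_var = pvariance([sum(row) for row in ratings])
    return k / (k - 1) * (1 - per_annotator_var / total_var)


annotator_1 = ["cat", "cat", "dog", "dog"]
annotator_2 = ["cat", "dog", "dog", "dog"]
print(cohens_kappa(annotator_1, annotator_2))

scores = [[1, 1], [2, 2], [3, 3]]  # two annotators in perfect agreement
print(cronbach_alpha(scores))
```

Kappa suits categorical labels, while Cronbach's Alpha needs numeric or ordinal scores, so which one applies depends on the annotation task.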

Statistical Process Control

Implement Statistical Process Control (SPC) to monitor and control the annotation process. Define a protocol for sampling, utilize control charts, and train annotators to identify statistical variances.

By collecting and analyzing statistical data, you ensure that your annotation process stays within pre-defined control limits. This helps maintain the quality and consistency of the annotated data.
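One way to sketch this is a p-chart, a standard control chart for the proportion of defective items per sample. The example below assumes fixed-size audit samples and uses the conventional three-sigma limits; the function names and numbers are hypothetical.

```python
from math import sqrt


def p_chart_limits(baseline_errors: list[int], sample_size: int) -> tuple[float, float, float]:
    """Return (lower, center, upper) control limits for the error proportion."""
    p_bar = sum(baseline_errors) / (len(baseline_errors) * sample_size)
    sigma = sqrt(p_bar * (1 - p_bar) / sample_size)
    # Proportions cannot leave [0, 1], so clamp the three-sigma limits.
    return max(0.0, p_bar - 3 * sigma), p_bar, min(1.0, p_bar + 3 * sigma)


def out_of_control(errors: list[int], sample_size: int,
                   limits: tuple[float, float, float]) -> list[int]:
    """Indexes of batches whose error rate falls outside the control limits."""
    lower, _, upper = limits
    return [i for i, e in enumerate(errors)
            if not lower <= e / sample_size <= upper]


# Errors found in audits of 100 annotations per batch during a stable period.
limits = p_chart_limits([5, 4, 6, 5], sample_size=100)
# New batches are checked against the limits from that baseline.
print(out_of_control([6, 14, 3], sample_size=100, limits=limits))
```

A flagged batch is a signal to investigate, for example a guideline change or a new annotator, rather than proof that the labels are wrong.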

Automated Error Detection

Leverage machine learning algorithms for automated error detection. This approach is particularly useful for large-scale projects, where manual review is impractical. Deep learning-based quality assurance techniques help identify error-prone data, highlighting areas requiring human review.
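As a simplified illustration (not a deep learning pipeline), one common heuristic is to compare each assigned label with a model's out-of-sample predicted probabilities and flag items where the model strongly disagrees. The function name, the threshold, and the sample data below are assumptions made for the sketch.

```python
def flag_suspect_labels(labels: list[str], probs: list[dict[str, float]],
                        threshold: float = 0.2) -> list[tuple[int, str, str]]:
    """Return (index, assigned_label, model_label) for likely annotation errors.

    probs[i] maps each class to the model's predicted probability for item i,
    ideally produced by cross-validation so the model has not seen the label.
    """
    suspects = []
    for i, (label, p) in enumerate(zip(labels, probs)):
        predicted = max(p, key=p.get)
        # Flag only when the model prefers another class AND gives the
        # assigned label very little probability.
        if predicted != label and p.get(label, 0.0) < threshold:
            suspects.append((i, label, predicted))
    return suspects


labels = ["cat", "dog", "cat"]
probs = [
    {"cat": 0.90, "dog": 0.10},
    {"cat": 0.95, "dog": 0.05},  # labeled "dog" but the model is sure it is "cat"
    {"cat": 0.45, "dog": 0.55},  # mild disagreement, not flagged
]
print(flag_suspect_labels(labels, probs))
```

The flagged items form the review queue for human annotators; the threshold trades review workload against the share of errors caught.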

Hitech BPO verified and classified over 10,000 construction articles to enhance AI algorithms for a Germany-based construction technology company. By manually validating complex data and improving auto-classified inputs, they reduced project costs by 50%.

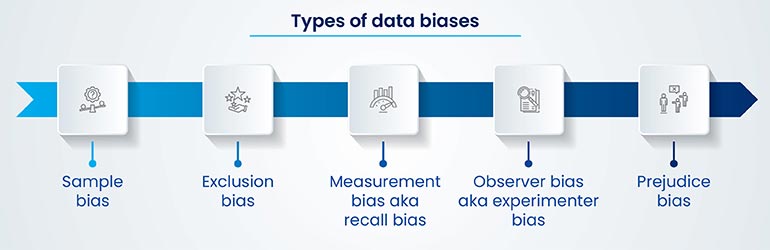

Given the significant impact of machine learning on decision making, it is vital to tackle bias and fairness in data annotation. Robust strategies guarantee that our models maintain accuracy, ethics and impartiality.

Bias Detection with Explainable AI

Explainable AI (XAI) methods, such as SHAP (SHapley Additive exPlanations) or LIME (Local Interpretable Model-agnostic Explanations), offer powerful tools for identifying features that exert a disproportionate influence on model predictions. Understanding these influential features allows us to pinpoint potential sources of bias.

For example, if an XAI method reveals that gender heavily influences loan approval predictions, even after accounting for it in the training data, this signals a potential annotation bias that requires immediate attention. Armed with these insights, we can refine annotation guidelines, resample datasets, or utilize bias mitigation techniques during model training to counteract these biases.
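SHAP and LIME require their own libraries, so the sketch below swaps in a much simpler check, a counterfactual flip test: change only the sensitive attribute and count how often the prediction changes. It is a lightweight stand-in, not an implementation of SHAP or LIME. The toy model, feature names, and thresholds are entirely hypothetical.

```python
from typing import Callable


def flip_rate(model: Callable[[dict], int], rows: list[dict],
              feature: str, values: tuple) -> float:
    """Share of rows whose prediction changes when `feature` is swapped.

    `values` holds the two values to swap between; any row holding a
    different value is set to the first one.
    """
    a, b = values
    changed = 0
    for row in rows:
        swapped = dict(row)
        swapped[feature] = b if row[feature] == a else a
        changed += model(row) != model(swapped)
    return changed / len(rows)


def toy_loan_model(row: dict) -> int:
    # Deliberately biased toy model: a lower income bar for one group.
    bar = 50 if row["gender"] == "m" else 60
    return int(row["income"] >= bar)


applicants = [
    {"income": 55, "gender": "m"},
    {"income": 55, "gender": "f"},
    {"income": 70, "gender": "f"},
    {"income": 40, "gender": "m"},
]
print(flip_rate(toy_loan_model, applicants, "gender", ("m", "f")))
```

A flip rate well above zero suggests the attribute drives predictions directly and that the annotation guidelines and training labels deserve a closer look.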

Adversarial Training

Adversarial training enhances model robustness by introducing carefully engineered adversarial examples into the training process. These examples are designed to exploit model vulnerabilities and expose hidden biases. By confronting the model with these challenging examples, we encourage it to make more balanced predictions and reduce reliance on potentially biased features.

For example, consider image recognition tasks where skin tone bias is a concern. Adversarial training allows us to generate images with diverse skin tones while maintaining consistent semantic labels, compelling the model to learn more equitable representations.

Ethical Frameworks

Ethical considerations must be deeply embedded throughout the data annotation machine learning process. Frameworks like those proposed by NIST offer valuable guidance in identifying potential bias sources, defining relevant fairness metrics, and establishing accountability mechanisms.

These frameworks champion a human-in-the-loop approach, advocating for diverse annotation teams and incorporating regular bias audits. This ensures that fairness is not an afterthought, but rather an integral component of the AI system itself.

Incorporating these strategies helps us create fair and unbiased annotations for machine learning models.


When you’re annotating data for machine learning, it’s crucial to scale and streamline your processes. As your data volume grows, maintaining high-quality annotations becomes increasingly challenging.

To handle the demands of large-scale annotation, leverage automation and AI-driven tools. These tools reduce manual effort and help maintain consistency across large datasets. Remember that selecting the right tool from the many open-source and commercial options is crucial, as your specific data type and annotation task will influence your choice.

Equally important is establishing clear annotation guidelines and instructions. Any ambiguity here will directly translate into inconsistencies in your labeled data, introducing noise that can negatively impact model training. Ensure your guidelines comprehensively cover not only the labeling task itself but also edge cases and how to handle them consistently.

Modern annotation tools offer features that go beyond basic labeling. Features like pre-labeling using pre-trained models or heuristics can significantly accelerate your annotation process. For example, in object detection tasks, a pre-trained model can provide initial bounding boxes that you can then refine, saving significant time and effort.

Finally, integrate automation and scripting to help streamline repetitive tasks within your labeling workflow. This becomes particularly valuable with large-scale datasets where manually labeling every instance is impractical.

Hitech BPO successfully annotated over 1.2 million fashion and home décor images in 12 days for a Californian tech firm, enhancing AI/ML model performance. The team ensured precision through training and customized workflows, increasing annotation productivity by 96% and delivering high-quality, formatted data.

Automated data labeling addresses the challenges of creating large, labeled datasets. By using AI for data labeling, we reduce human error, increase efficiency, and minimize the costs associated with manual labeling.

Transfer Learning for Pre-Labeling

Transfer learning significantly improves the efficiency of data annotation. This technique uses a model trained on one task as a starting point for a new related task. By transferring knowledge from a pre-trained model, we jumpstart the labeling process and achieve better performance with less labeled data.

We use pre-trained models to extract relevant features from the input data, which are then fed into a new classifier specific to our target task. This is particularly useful when labeled data is limited in our domain, but we can leverage knowledge from related domains.

Another technique is fine-tuning, in which we further train a pre-trained model on our specific dataset. This adapts the model to our task while benefiting from its existing knowledge. Fine-tuning is effective for tasks like sentiment analysis, text annotation and summarization.

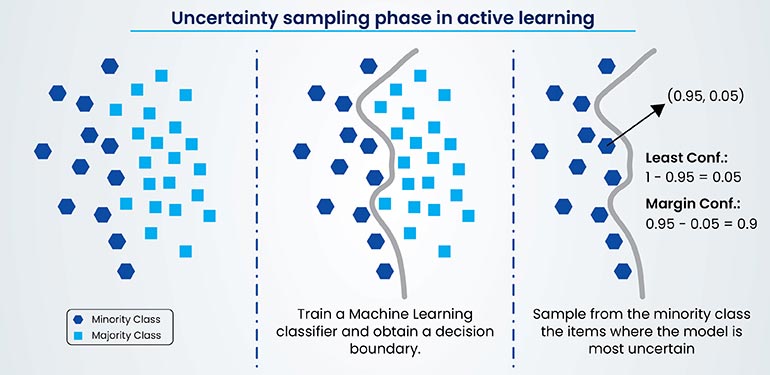

Active Learning with Uncertainty Sampling

Active learning maximizes labeling efforts by intelligently selecting the most informative samples for annotation. Uncertainty sampling, a popular approach, selects examples with the most uncertain predictions.

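You can measure uncertainty in several ways. Common choices include least confidence (how low the top predicted probability is), margin (the gap between the two most likely classes), and entropy (how spread out the predicted distribution is).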

Focusing on the most uncertain samples can achieve better model performance with fewer labeled examples. This is extremely important when annotation budgets are limited.
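A minimal sketch of uncertainty sampling follows, using three widely used uncertainty scores and ranking unlabeled items by entropy. The function names and the sample probabilities are illustrative assumptions; the probabilities would normally come from the current model.

```python
from math import log


def least_confidence(p: list[float]) -> float:
    # Higher when the top class has low probability.
    return 1 - max(p)


def margin(p: list[float]) -> float:
    # Gap between the two most likely classes; SMALLER means more uncertain.
    top = sorted(p, reverse=True)
    return top[0] - top[1]


def entropy(p: list[float]) -> float:
    # Higher when probability mass is spread across classes.
    return -sum(x * log(x) for x in p if x > 0)


def select_for_annotation(probs: list[list[float]], budget: int) -> list[int]:
    """Indexes of the `budget` most uncertain items, ranked by entropy."""
    ranked = sorted(range(len(probs)), key=lambda i: entropy(probs[i]), reverse=True)
    return ranked[:budget]


# Predicted class probabilities for three unlabeled items.
pool = [[0.9, 0.1], [0.5, 0.5], [0.7, 0.3]]
print(select_for_annotation(pool, budget=2))
```

The three scores rank binary problems identically; they diverge once there are more than two classes, so the choice is worth validating on your own data.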

Human-in-the-Loop Systems

While automation undoubtedly boosts efficiency, human expertise remains essential to achieving high-quality annotations. Human-in-the-loop (HITL) systems effectively merge automated algorithms with unique human skills.

Essentially, HITL systems involve automated labeling algorithms working in tandem with human annotators: the model proposes labels, and annotators review and correct them, focusing on the cases where the model is least certain.

This collaborative approach harnesses the speed of automation while upholding the quality that human annotators ensure. HITL systems prove particularly valuable for complex tasks that demand specialized domain expertise, such as image annotation.
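The routing step at the heart of such a system can be sketched in a few lines: confident model predictions are accepted automatically, and the rest go to a human review queue. The threshold, function names, and item IDs below are hypothetical, and real systems usually also audit a sample of the auto-accepted labels.

```python
def route(predictions: list[tuple[str, str, float]],
          auto_accept: float = 0.9) -> tuple[list[tuple[str, str]], list[tuple[str, str]]]:
    """Split (item_id, label, confidence) predictions into accepted and review lists."""
    accepted, review = [], []
    for item_id, label, confidence in predictions:
        target = accepted if confidence >= auto_accept else review
        target.append((item_id, label))
    return accepted, review


def merge(accepted: list[tuple[str, str]], human_labels: dict[str, str]) -> dict[str, str]:
    """Combine auto-accepted labels with human decisions; humans win on conflict."""
    final = dict(accepted)
    final.update(human_labels)
    return final


predictions = [("img1", "shirt", 0.97), ("img2", "sofa", 0.62), ("img3", "lamp", 0.91)]
accepted, review = route(predictions)
print(review)  # items a human annotator should check

# Suppose the annotator corrects the one queued item.
print(merge(accepted, {"img2": "chair"}))
```

The corrected labels can then be added to the training set so that the next round of pre-labeling starts from a better model.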

Accurate data labels ensure proper training data that enables ML models to identify patterns, make predictions, and generalize to new data. Inaccurate or inconsistent labels introduce noise and bias, hindering model performance and potentially leading to unreliable or even harmful outcomes.

Therefore, continuous improvement and adaptation of labeling strategies are essential for building trustworthy ML models.

As project requirements evolve and models become more sophisticated, labeling strategies must adapt to ensure data remains relevant and accurately reflects the task at hand. This involves refining labeling guidelines, incorporating new data sources or adjusting for concept drift. Following these strategies is crucial to gain a competitive edge in your data annotation for ML projects.
